Introduction #

The authors of the ADE20K dataset address the significant challenge of scene parsing, encompassing the recognition and segmentation of objects and stuff within images, a vital task in the domain of computer vision. Despite the efforts made by the research community to gather data, there remains a scarcity of image datasets that comprehensively cover a broad spectrum of scenes and object categories, along with detailed and dense annotations suitable for scene parsing. To fill this void, the authors introduce the ADE20K dataset. This dataset features diverse annotations that span scenes, objects, parts of objects, and, intriguingly, even parts of parts. In order to facilitate benchmarking for scene parsing, the ADE20K dataset includes 150 object and stuff classes, and various segmentation baseline models undergo evaluation using this benchmark. You can access the hierarchy of classes on the official website of the dataset.

In the realm of computer vision, gaining a semantic understanding of visual scenes is a paramount goal. Contemporary robotic systems can accurately predict scene categories from visual scenes such as living rooms. However, for seamless navigation and manipulation within scenes, robots must digest an extensive amount of information. This entails recognizing and localizing not only primary objects like sofas, tables, and TVs but also their parts, such as the seat of a chair or the handle of a cup. Furthermore, these systems need to segment elements like floors, walls, and ceilings to facilitate spatial navigation.

Recognizing and segmenting objects and stuff within images remains a pivotal problem in scene understanding. Beyond the domain of image-level recognition, scene parsing necessitates denser scene annotations encompassing a broad spectrum of objects. However, current datasets often possess a limited count of objects, and some objects may not represent the most common elements encountered in the real world. Moreover, the datasets are confined to specific scene categories.

The dataset summary indicates that the training set comprises 20,210 images, the validation set contains 2,000 images, and the testing set contains 3,000 images. All images undergo exhaustive annotations for objects, with many objects also annotated for their respective parts. Each object includes additional information about attributes like occlusion or cropping. While the validation set is meticulously annotated with parts, the annotations for parts are not exhaustive across images in the training set. The annotations within the dataset are continually expanding, reflecting ongoing efforts.

The process of image annotation involved sourcing images from datasets such as LabelMe, SUN, and Places, with a focus on capturing a diverse set of scenes that cover the 900 scene categories delineated in the SUN database. These images were annotated by a single dedicated expert worker using the LabelMe interface. Annotations were divided into three types: object segments with associated names, object parts, and attributes. Notably, all object instances underwent independent segmentation to enable the dataset’s use for training and evaluating detection or segmentation algorithms. Unlike datasets such as COCO, Pascal, or Cityscape which begin by defining a predefined set of object categories, this dataset embraces the challenge of labeling all objects within a scene, which frequently results in the emergence of new object categories. To manage this dynamic scenario, the annotator curated a dictionary of visual concepts, consistently adding new classes to maintain object naming coherence.

Annotation interface, the list of the objects and their associated parts in the image.

The authors also addressed the associations of object parts, which might themselves have parts, contributing to a hierarchical structure. This hierarchy reaches a depth of 3 and is elaborated upon in supplementary materials. For instance, a door can be an object (in an indoor picture), or a part (when it is the door of a car). Some objects are always parts (e.g., a leg, a hand, …), although, in some cases, they can appear detached from the whole (e.g., a car wheel inside a garage), and some objects are never parts (e.g., a person, a truck, …). The same name class (e.g., door) can correspond to several visual categories depending on which object it is a part of. For instance, a car door is visually different from a cabinet door or a building door. However, they share similar affordances. The value proportionClassIsPart© can be used to decide if a class behaves mostly as an object or as a part. When an object is not part of another object its segmentation mask will appear inside *_seg.png. If the class behaves as a part, then the segmentation mask will appear inside *_seg_parts.png. Correctly detecting an object requires classifying if the object is behaving as an independent object or if it is a part of another object.

An in-depth analysis of annotation consistency underscores the complexities arising when class lists are open-ended. While labeling tasks with a fixed list of object classes is relatively straightforward, challenges emerge when the list of classes grows unbounded due to new categories appearing over time. The authors conducted an assessment by re-annotating a subset of images from the validation set after a six-month interval. Notably, 82.4% of the pixels retained the same label across both annotations. The remaining 17.6% of pixels exhibited errors, which were classified into three error types: segmentation quality, object naming, and segmentation quantity. The median error rates for these error types were 4.8%, 0.3%, and 2.6%, respectively, indicating that the mean error rate was influenced by a few images. The most common error type was identified as segmentation quality.

(a) Object classes are sorted by frequency. Only the top 270 classes with more than 100 annotated instances are shown. 68 classes have more than 1000 segmented instances. (b) Frequency of parts grouped by objects. There are more than 200 object classes with annotated parts. Only objects with 5 or more parts are shown in this plot (we show at most 7 parts for each object class). (c) Objects ranked by the number of scenes they are part of. (d) Object parts are ranked by the number of objects they are part of. (e) Examples of objects with doors. The bottom-right image is an example where the door does not behave as a part.

Moreover, dataset statistics reveal the distribution of ranked object frequencies, closely resembling Zipf’s law, which is often observed when objects are comprehensively annotated. Distributions of annotated parts, object sharing across scenes, parts shared by objects, and the variability in part appearances were also examined. Additional analyses demonstrated the mode of object segmentations, distributions of distinct classes and instances, and distributions of parts within the dataset. In particular, the authors highlighted the emergence of new object classes as annotation progresses, quantifying the probability of encountering a new class after labeling a certain number of instances.

Summary #

ADE20K is a dataset for instance segmentation, semantic segmentation, and object detection tasks. It is applicable or relevant across various domains. Also, it is used in the robotics industry.

The dataset consists of 27574 images with 712812 labeled objects belonging to 3579 different classes including wall, sky, floor, and other: window, ceiling, building, door, person, tree, trees, road, chair, picture, plant, car, cabinet, sidewalk, table, sign, ground, grass, curtain, mountain, column, street light, table lamp, bed, mirror, and 3551 more.

Images in the ADE20K dataset have pixel-level instance segmentation annotations. Due to the nature of the instance segmentation task, it can be automatically transformed into a semantic segmentation (only one mask for every class) or object detection (bounding boxes for every object) tasks. All images are labeled (i.e. with annotations). There are 2 splits in the dataset: training (25574 images) and validation (2000 images). Additionally, every image contains information about scene. The dataset was released in 2019 by the Massachusetts Institute of Technology, USA and University of Toronto, Canada.

Here is the visualized example grid with animated annotations:

Explore #

ADE20K dataset has 27574 images. Click on one of the examples below or open "Explore" tool anytime you need to view dataset images with annotations. This tool has extended visualization capabilities like zoom, translation, objects table, custom filters and more. Hover the mouse over the images to hide or show annotations.

Class balance #

There are 3579 annotation classes in the dataset. Find the general statistics and balances for every class in the table below. Click any row to preview images that have labels of the selected class. Sort by column to find the most rare or prevalent classes.

Class ㅤ | Images ㅤ | Objects ㅤ | Count on image average | Area on image average |

|---|---|---|---|---|

wall➔ polygon | 14701 | 39322 | 2.67 | 39.16% |

sky➔ polygon | 11831 | 12699 | 1.07 | 28.26% |

floor➔ polygon | 11705 | 14873 | 1.27 | 19.38% |

window➔ polygon | 9932 | 53207 | 5.36 | 6.86% |

ceiling➔ polygon | 8246 | 9838 | 1.19 | 13.72% |

building➔ polygon | 7364 | 18253 | 2.48 | 37.84% |

door➔ polygon | 7263 | 17272 | 2.38 | 5.88% |

person➔ polygon | 6897 | 30050 | 4.36 | 7.88% |

tree➔ polygon | 5885 | 16837 | 2.86 | 9.96% |

trees➔ polygon | 5660 | 10546 | 1.86 | 17.27% |

Images #

Explore every single image in the dataset with respect to the number of annotations of each class it has. Click a row to preview selected image. Sort by any column to find anomalies and edge cases. Use horizontal scroll if the table has many columns for a large number of classes in the dataset.

Class sizes #

The table below gives various size properties of objects for every class. Click a row to see the image with annotations of the selected class. Sort columns to find classes with the smallest or largest objects or understand the size differences between classes.

Class | Object count | Avg area | Max area | Min area | Min height | Min height | Max height | Max height | Avg height | Avg height | Min width | Min width | Max width | Max width |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

window polygon | 53207 | 1.26% | 99.53% | 0% | 3px | 0.24% | 1648px | 100% | 115px | 12.28% | 2px | 0.18% | 2046px | 100% |

wall polygon | 39322 | 14.51% | 99.64% | 0% | 1px | 0.12% | 2200px | 100% | 407px | 52.19% | 1px | 0.08% | 2200px | 100% |

person polygon | 30050 | 1.77% | 71.86% | 0% | 1px | 0.25% | 2086px | 100% | 166px | 20.8% | 1px | 0.19% | 1639px | 99.83% |

building polygon | 18253 | 15.18% | 99.64% | 0% | 2px | 0.27% | 2200px | 100% | 413px | 40.73% | 2px | 0.12% | 2200px | 100% |

door polygon | 17272 | 2.43% | 88.51% | 0% | 6px | 0.91% | 2200px | 100% | 249px | 27.85% | 2px | 0.24% | 1599px | 100% |

tree polygon | 16837 | 3.43% | 84.7% | 0% | 2px | 0.12% | 2188px | 100% | 305px | 31.12% | 1px | 0.1% | 2199px | 100% |

chair polygon | 16492 | 1.48% | 29.45% | 0% | 1px | 0.19% | 1366px | 99.69% | 155px | 19.15% | 1px | 0.07% | 1353px | 100% |

car polygon | 15431 | 1.41% | 98.72% | 0% | 4px | 0.39% | 1648px | 100% | 101px | 9.76% | 3px | 0.34% | 1996px | 100% |

floor polygon | 14873 | 15.07% | 99.51% | 0% | 1px | 0.06% | 2045px | 100% | 214px | 29.36% | 1px | 0.05% | 2200px | 100% |

sky polygon | 12699 | 26.05% | 99.71% | 0% | 2px | 0.24% | 2197px | 100% | 316px | 42.28% | 1px | 0.1% | 2200px | 100% |

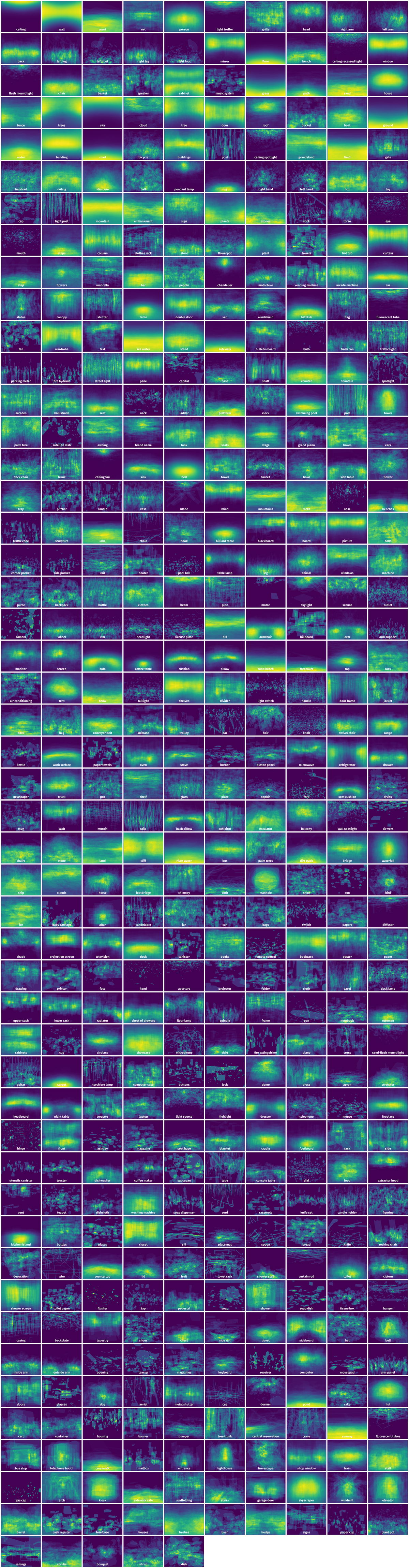

Spatial Heatmap #

The heatmaps below give the spatial distributions of all objects for every class. These visualizations provide insights into the most probable and rare object locations on the image. It helps analyze objects' placements in a dataset.

Objects #

Table contains all 96522 objects. Click a row to preview an image with annotations, and use search or pagination to navigate. Sort columns to find outliers in the dataset.

Object ID ㅤ | Class ㅤ | Image name click row to open | Image size height x width | Height ㅤ | Height ㅤ | Width ㅤ | Width ㅤ | Area ㅤ |

|---|---|---|---|---|---|---|---|---|

1➔ | ceiling polygon | ADE_val_00000488.jpg | 256 x 256 | 51px | 19.92% | 256px | 100% | 12.1% |

2➔ | wall polygon | ADE_val_00000488.jpg | 256 x 256 | 148px | 57.81% | 102px | 39.84% | 19.19% |

3➔ | wall polygon | ADE_val_00000488.jpg | 256 x 256 | 146px | 57.03% | 156px | 60.94% | 24.68% |

4➔ | window polygon | ADE_val_00000488.jpg | 256 x 256 | 115px | 44.92% | 34px | 13.28% | 5.26% |

5➔ | cabinet polygon | ADE_val_00000488.jpg | 256 x 256 | 76px | 29.69% | 62px | 24.22% | 6.38% |

6➔ | cabinet polygon | ADE_val_00000488.jpg | 256 x 256 | 66px | 25.78% | 53px | 20.7% | 4.12% |

7➔ | cabinet polygon | ADE_val_00000488.jpg | 256 x 256 | 43px | 16.8% | 53px | 20.7% | 2.79% |

8➔ | microwave polygon | ADE_val_00000488.jpg | 256 x 256 | 37px | 14.45% | 57px | 22.27% | 2.77% |

9➔ | cabinet polygon | ADE_val_00000488.jpg | 256 x 256 | 87px | 33.98% | 67px | 26.17% | 4.98% |

10➔ | refrigerator polygon | ADE_val_00000488.jpg | 256 x 256 | 170px | 66.41% | 48px | 18.75% | 11.62% |

License #

Images

MIT, CSAIL does not own the copyright of the images. If you are a researcher or educator who wish to have a copy of the original images for non-commercial research and/or educational use, we may provide you access by filling a request in our site. You may use the images under the following terms:

- Researcher shall use the Database only for non-commercial research and educational purposes. MIT makes no representations or warranties regarding the Database, including but not limited to warranties of non-infringement or fitness for a particular purpose.

- Researcher accepts full responsibility for his or her use of the Database and shall defend and indemnify MIT, including their employees, Trustees, officers and agents, against any and all claims arising from Researcher’s use of the Database, including but not limited to Researcher’s use of any copies of copyrighted images that he or she may create from the Database.

- Researcher may provide research associates and colleagues with access to the Database provided that they first agree to be bound by these terms and conditions.

- MIT reserves the right to terminate Researcher’s access to the Database at any time.

- If Researcher is employed by a for-profit, commercial entity, Researcher’s employer shall also be bound by these terms and conditions, and Researcher hereby represents that he or she is fully authorized to enter into this agreement on behalf of such employer.

Software and Annotations

This website, image annotations and the software provided belongs to MIT CSAIL and is licensed under a Creative Commons BSD-3 License Agreement

Copyright 2019 MIT, CSAIL

Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

- Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.

- Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.

- Neither the name of the copyright holder nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission.

Citation #

If you make use of the ADE20K data, please cite the following reference:

@dataset{ADE20K,

author={Bolei Zhou and Hang Zhao and Xavier Puig and Tete Xiao and Sanja Fidler and Adela Barriuso and Antonio Torralba},

title={ADE20K},

year={2019},

url={https://groups.csail.mit.edu/vision/datasets/ADE20K/}

}

If you are happy with Dataset Ninja and use provided visualizations and tools in your work, please cite us:

@misc{ visualization-tools-for-ade20k-dataset,

title = { Visualization Tools for ADE20K Dataset },

type = { Computer Vision Tools },

author = { Dataset Ninja },

howpublished = { \url{ https://datasetninja.com/ade20k } },

url = { https://datasetninja.com/ade20k },

journal = { Dataset Ninja },

publisher = { Dataset Ninja },

year = { 2026 },

month = { feb },

note = { visited on 2026-02-15 },

}Download #

Dataset ADE20K can be downloaded in Supervisely format:

As an alternative, it can be downloaded with dataset-tools package:

pip install --upgrade dataset-tools

… using following python code:

import dataset_tools as dtools

dtools.download(dataset='ADE20K', dst_dir='~/dataset-ninja/')

Make sure not to overlook the python code example available on the Supervisely Developer Portal. It will give you a clear idea of how to effortlessly work with the downloaded dataset.

The data in original format can be downloaded here.

Disclaimer #

Our gal from the legal dep told us we need to post this:

Dataset Ninja provides visualizations and statistics for some datasets that can be found online and can be downloaded by general audience. Dataset Ninja is not a dataset hosting platform and can only be used for informational purposes. The platform does not claim any rights for the original content, including images, videos, annotations and descriptions. Joint publishing is prohibited.

You take full responsibility when you use datasets presented at Dataset Ninja, as well as other information, including visualizations and statistics we provide. You are in charge of compliance with any dataset license and all other permissions. You are required to navigate datasets homepage and make sure that you can use it. In case of any questions, get in touch with us at hello@datasetninja.com.