Introduction

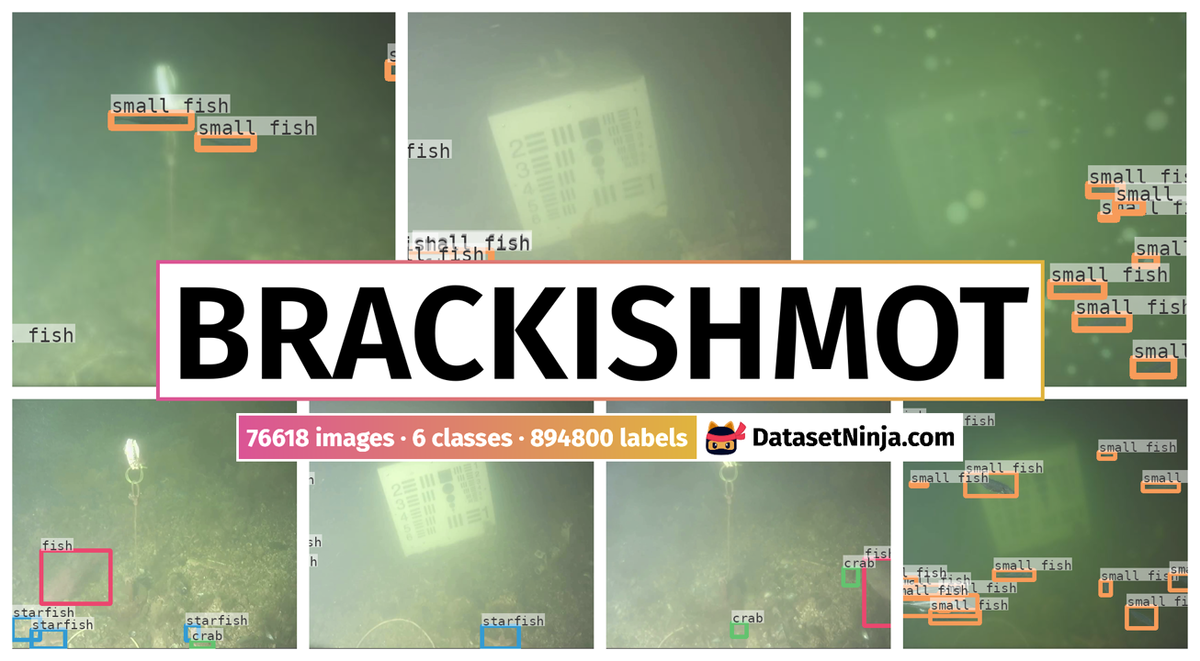

The authors propose a novel multi-object tracking (MOT) dataset of marine organisms in the wild named BrackishMOT: The Brackish Multi-Object Tracking Dataset. MOT is the task of obtaining the trajectories of all objects in a scene. The dataset focuses on tracking schools of small fish, which is a notoriously difficult MOT task. BrackishMOT consists of 98 sequences captured in the wild and covers six classes. Additionally, the authors propose a framework for creating synthetic sequences to expand the dataset. The framework consists of animated fish models and realistic underwater environments.

Motivation

For millennia, humans have depended on the oceans as a reliable food source. However, marine ecosystems are now declining rapidly due to human activities. This poses a significant challenge for biodiversity and for coastal communities worldwide that rely heavily on fish as their primary food source. The seriousness of the issue is underscored by the United Nations’ inclusion of Life Below Water as the fourteenth Sustainable Development Goal.

The growing emphasis on monitoring ocean health has placed immense pressure on marine researchers to collect data at an unprecedented rate. However, traditional methods of gathering marine data involve manually catching and measuring organisms. As a result, they are often labor-intensive, intrusive, and hard to scale. It is therefore imperative to develop assistive solutions that optimize and scale data collection in marine environments.

Over the past decade, computer vision has advanced remarkably, driven by powerful graphics processing units (GPUs) and the widespread adoption of deep learning. At the same time, underwater cameras have become substantially more efficient and affordable. This gives marine researchers an opportunity to combine cameras and computer vision to enhance data collection. A significant obstacle remains, however: the lack of marine datasets for training and evaluating computer vision models tailored to underwater environments.

Tracking is a core component of marine research and serves multiple purposes, such as counting individuals or analyzing behavior. Manually annotated datasets are necessary for evaluating the performance of trackers on data from the wild. However, they are not scalable and do not necessarily generalize to environments that are not included in the dataset.

Dataset description

The authors explore the potential of using synthetic underwater sequences to train multi-object trackers. They present a framework for generating synthetic sequences that resemble the BrackishMOT environment. They also analyze how key factors affect tracker performance, specifically turbidity, floating particles, and background.

The authors present and publish a bounding box annotated underwater multi-object tracking dataset captured in the wild named BrackishMOT, together with a synthetic framework for generating more data.

The authors extend the Brackish Dataset to a multi-object tracking (MOT) task. To this end, they provide a new set of ground-truth annotations for each sequence in the MOTChallenge annotation format. Furthermore, they introduce nine additional sequences that focus on the small fish category. This brings the total number of sequences for the MOT task within the Brackish Dataset to 98, hereafter referred to as BrackishMOT. The small fish category is particularly relevant for the MOT task, as it includes species known for their social and schooling behavior.
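The following sketch illustrates roughly how MOTChallenge-style ground truth turns into trajectories by grouping annotation rows by target ID. It assumes comma-separated rows whose first six fields are `frame, id, bb_left, bb_top, bb_width, bb_height`, which is the common MOTChallenge layout. Remaining columns are ignored. The helper name `load_trajectories` and the toy rows are hypothetical and are not taken from BrackishMOT.

```python
import csv
import io
from collections import defaultdict


def load_trajectories(text):
    """Group MOTChallenge-style rows into per-target trajectories.

    Assumes each row starts with: frame, id, bb_left, bb_top, bb_width, bb_height.
    Any further columns (confidence, class, visibility, ...) are ignored.
    Returns {target_id: [(frame, (left, top, width, height)), ...]}.
    """
    tracks = defaultdict(list)
    for row in csv.reader(io.StringIO(text)):
        if not row:
            continue  # skip blank lines
        frame, target_id = int(row[0]), int(row[1])
        box = tuple(float(v) for v in row[2:6])
        tracks[target_id].append((frame, box))
    # Sort every trajectory by frame number so it reads in temporal order.
    return {tid: sorted(points) for tid, points in tracks.items()}


# Toy rows for illustration only (not taken from BrackishMOT).
sample = "1,1,100,50,20,10,1,1,1\n2,1,104,52,20,10,1,1,1\n1,2,300,80,18,9,1,1,1\n"
tracks = load_trajectories(sample)
for tid, points in tracks.items():
    print(tid, [frame for frame, _ in points])
```

Keeping whole rows per target makes it straightforward to compute per-track statistics, such as track length or displacement, later on.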

Image samples from the Brackish Dataset. In a majority of the sequences containing the small fish class, there are multiple specimens forming a school of fish.

The dataset is imbalanced, with few occurrences of the shrimp, fish, and jellyfish classes. For each of these three classes, the number of bounding boxes equals the number of frames in which the class occurs. From this, the authors infer that these classes appear in the sequences as single objects. The small fish class is the only class that exhibits erratic motion and appears in groups, so it is deemed the most interesting class with respect to MOT.
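The inference about single objects can be reproduced from the per-class counts in the class balance table later on this page. In that table, the image count of a class is the number of frames that contain it. Every such frame holds at least one box, so equal counts mean exactly one box per frame. Here is a minimal check (the helper name is hypothetical):

```python
# Per-class (images, objects) counts from the class balance table on this page.
CLASS_COUNTS = {
    "small fish": (59984, 873191),
    "crab": (5363, 11737),
    "starfish": (5223, 7191),
    "fish": (1971, 1971),
    "shrimp": (403, 403),
    "jellyfish": (307, 307),
}


def single_object_classes(counts):
    """Classes whose box count equals their frame count: one box per frame they appear in."""
    return sorted(name for name, (frames, boxes) in counts.items() if frames == boxes)


for name, (frames, boxes) in CLASS_COUNTS.items():
    print(f"{name:>10}: {boxes / frames:.2f} boxes per frame")

print(single_object_classes(CLASS_COUNTS))
```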

Plots describing the composition of the BrackishMOT dataset with respect to motion and class distribution. Both plots are computed over all sequences.

Synthetic data framework

The authors constructed their synthetic framework within the Unity game engine, leveraging its built-in rendering pipeline. The framework revolves around three primary components: generating lifelike fish meshes, simulating fish behavior, and crafting a visually convincing underwater setting. They offer customizable options for each component, allowing users to tailor the synthetic environment to resemble the BrackishMOT data. Importantly, the framework is designed to be highly adaptable, enabling easy extension to accommodate other species, behavior models, and environments.

Fish model. The model chosen for this project was sourced from a database of fish images and photogrammetry 3D reconstructions. According to the authors, it was selected for its visual similarity to a stickleback, a common member of the small fish class that is frequently found schooling in the sequences of the Brackish dataset. The 3D input model was decimated to 11,000 vertices to reduce its complexity. To retain finer details of the mesh, a normal map was generated from a high-resolution texture. The model was then rigged using Blender’s bone system to allow fluid animation of both the body and the tail. The number of fish spawned in each sequence is drawn at random from the range 4 to 50, reflecting the diversity observed in the BrackishMOT sequences. Additionally, the initial pose, the scale, and the appearance (texture albedo and glossiness) of each fish vary among the sequences.
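The per-sequence randomization described above could be sketched as follows. Only the range of 4 to 50 fish comes from the text. The `FishInstance` fields, the scale, tint, and glossiness ranges, and the reduction of the initial pose to a random yaw angle are illustrative assumptions. They are not parameters of the authors' Unity framework.

```python
import random
from dataclasses import dataclass


@dataclass
class FishInstance:
    scale: float        # uniform scale factor (range is an assumption)
    albedo_tint: tuple  # RGB multiplier for the texture albedo (assumption)
    glossiness: float   # material glossiness in [0, 1] (assumption)
    yaw_deg: float      # initial heading; the full pose is simplified to a yaw angle


def sample_sequence(rng, min_fish=4, max_fish=50):
    """Sample the fish population for one synthetic sequence.

    The 4..50 count range follows the text; every other range below is a
    hypothetical placeholder, not a value from the BrackishMOT framework.
    """
    n = rng.randint(min_fish, max_fish)  # inclusive on both ends
    return [
        FishInstance(
            scale=rng.uniform(0.8, 1.2),
            albedo_tint=tuple(rng.uniform(0.7, 1.0) for _ in range(3)),
            glossiness=rng.uniform(0.2, 0.6),
            yaw_deg=rng.uniform(0.0, 360.0),
        )
        for _ in range(n)
    ]


rng = random.Random(0)  # seeded so a generated sequence can be reproduced
population = sample_sequence(rng)
print(len(population), population[0])
```

Passing an explicit `random.Random` instance keeps each generated sequence reproducible from its seed.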

Illustration of the stickleback fish model used in the framework. (a) Initial high-resolution model and (b) decimated and rigged model for Unity.

Behavior model. To emulate realistic fish schooling behavior, the authors employ a boid-based approach. Each fish in the simulation takes into account the position and direction of all nearby fish. The velocity and heading of each fish are determined by four key factors: separation (s), cohesion (k), alignment (m), and leader (l). Separation ensures that each fish avoids collisions with its neighbors. Cohesion acts as a force that pulls fish towards the center of the group. Alignment encourages individual fish to match the velocity of their neighbors. The leader factor indicates the direction towards which a designated leader is heading. For each fish, the leader is selected as the neighbor whose heading vector most closely aligns with the fish’s own heading vector.
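Below is a minimal boid update in the spirit of this description, written in plain Python. Several choices are assumptions: the neighbourhood radius, the weights, the inverse-square separation, the use of the mean neighbour heading as the alignment target, and the current heading kept as an inertia term. `steer` and `step` are hypothetical names, and the authors' Unity implementation may differ.

```python
import math


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def scale(a, factor):
    return tuple(x * factor for x in a)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def unit(a):
    """Normalise a vector; a zero vector is returned unchanged."""
    length = math.sqrt(dot(a, a))
    return scale(a, 1.0 / length) if length > 0 else a


def mean(vectors):
    total = (0.0, 0.0, 0.0)
    for v in vectors:
        total = add(total, v)
    return scale(total, 1.0 / len(vectors))


def steer(i, positions, headings, radius=2.0,
          w_sep=1.5, w_coh=1.0, w_align=1.0, w_lead=0.5):
    """New unit heading for fish i.

    w_sep, w_coh, w_align and w_lead weight the separation (s), cohesion (k),
    alignment (m) and leader (l) factors; the values are assumptions.
    """
    me, my_dir = positions[i], headings[i]
    neighbours = [j for j in range(len(positions))
                  if j != i and math.dist(me, positions[j]) < radius]
    if not neighbours:
        return my_dir  # nobody nearby: keep the current heading

    # Separation: push away from each neighbour, more strongly the closer it is.
    separation = (0.0, 0.0, 0.0)
    for j in neighbours:
        d = math.dist(me, positions[j])
        separation = add(separation, scale(sub(me, positions[j]), 1.0 / (d * d + 1e-9)))

    # Cohesion: pull towards the centre of the neighbours.
    cohesion = sub(mean([positions[j] for j in neighbours]), me)
    # Alignment: match the neighbours' mean heading (a proxy for their velocity).
    alignment = mean([headings[j] for j in neighbours])
    # Leader: the neighbour whose heading is most similar to this fish's heading.
    leader_dir = headings[max(neighbours, key=lambda j: dot(headings[j], my_dir))]

    # Start from the current heading (inertia term, an assumption) and add the factors.
    total = my_dir
    for weight, force in ((w_sep, separation), (w_coh, cohesion),
                          (w_align, alignment), (w_lead, leader_dir)):
        total = add(total, scale(unit(force), weight))
    new_dir = unit(total)
    return new_dir if dot(new_dir, new_dir) > 0 else my_dir


def step(positions, headings, speed=0.1):
    """Advance all fish one frame; headings are computed before anyone moves."""
    new_headings = [steer(i, positions, headings) for i in range(len(positions))]
    new_positions = [add(p, scale(h, speed)) for p, h in zip(positions, new_headings)]
    return new_positions, new_headings


school = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
dirs = [(1.0, 0.0, 0.0)] * 3
for _ in range(5):
    school, dirs = step(school, dirs)
```

`step` computes every new heading before any fish moves, so the update does not depend on the order in which fish are processed.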

The surrounding environment. To explore how environmental changes affect tracker performance, the authors devised a synthetic environment based on three variables: turbidity, background, and distractors.

Turbidity represents microscopic particles suspended in the water. It acts like a fog that becomes denser with increasing distance from the camera. This phenomenon significantly impacts visibility in the BrackishMOT sequences. The authors simulated turbidity using a custom Unity material with adjustable transparency, combined with post-processing effects such as depth of field and color grading. The material’s color ranges from grey to green, mirroring the turbidity observed in the BrackishMOT sequences. Both color and intensity vary across the generated sequences.

For background scenes, the authors used videos from the Brackish dataset that contain no fish, which enhances realism. The background sequences were varied through saturation, color, and blur adjustments. When no video background is used, a monotone color matching the turbidity hue is applied instead.

Distractors represent floating particles. In shallow waters with strong currents, like those in the BrackishMOT sequences, suspended plant material and sediment often obscure the camera view. To simulate this, the framework adds distractor spheres of varying size, transparency, and color. Their color spectrum matches that of the turbidity and the monotone backgrounds. The number of distractors varies across sequences, and each distractor is randomly positioned and moved between frames.

Together, these variables give the synthetic scenes a complexity akin to real-world conditions. Each variable takes one of two settings: turbidity on or off, distractors on or off, and a video or plain background. Their combinations therefore yield eight (2 × 2 × 2) distinct synthetic environments. Each generated video sequence lasts 10 seconds and comprises 150 frames at 15 FPS, closely resembling the BrackishMOT sequences. The authors produced 50 sequences for each environment variation.

Importantly, the flexibility of the synthetic framework allows it to be adjusted to diverse underwater environments. For instance, changing the video background or introducing new models can significantly alter the look of the sequences.
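The distance-dependent fog effect of turbidity can be illustrated with the generic exponential attenuation model. In this model, a fraction t = exp(−βd) of an object's color survives at distance d, and the remainder is replaced by the water color. This is a swapped-in textbook model, not the custom Unity material the authors used. The tint, density, and function name below are hypothetical.

```python
import math


def apply_turbidity(color, depth, water_color, density):
    """Blend an object colour towards the water colour with distance.

    Uses exponential attenuation t = exp(-density * depth); this is a generic
    fog model, not the custom Unity material used by the BrackishMOT authors.
    color, water_color: RGB tuples in [0, 1]; depth in scene units.
    """
    t = math.exp(-density * depth)  # fraction of the object's colour that survives
    return tuple(c * t + w * (1.0 - t) for c, w in zip(color, water_color))


GREEN_GREY = (0.35, 0.45, 0.38)  # hypothetical water tint between grey and green
fish_rgb = (0.8, 0.7, 0.6)
for depth in (0.5, 2.0, 8.0):
    print(depth, apply_turbidity(fish_rgb, depth, GREEN_GREY, density=0.4))
```

Randomizing `water_color` and `density` per sequence would mimic the varying turbidity color and intensity described above.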

Visualisation of different conditions of the synthetic environment. (a) Plain background, no turbidity, no distractors (Synth). (b) Video background, no turbidity, no distractors (SynthB). (c) Plain background, turbidity, no distractors (SynthT). (d) Plain background, no turbidity, with distractors (SynthD). (e) Plain background, with turbidity and distractors (SynthDT). (f) Video background with turbidity, but without distractors (SynthBT). (g) Video background with distractors, but no turbidity (SynthBD). (h) Video background with turbidity and distractors (SynthBTD).
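The eight conditions are every on/off combination of the three variables. The sketch below enumerates them and attaches the names used in the caption. The flag names are hypothetical.

```python
from itertools import product

# Condition names as they appear in the figure caption, keyed by
# (video_background, turbidity, distractors).
CONDITION_NAMES = {
    (False, False, False): "Synth",
    (True, False, False): "SynthB",
    (False, True, False): "SynthT",
    (False, False, True): "SynthD",
    (False, True, True): "SynthDT",
    (True, True, False): "SynthBT",
    (True, False, True): "SynthBD",
    (True, True, True): "SynthBTD",
}


def conditions():
    """Yield (name, flags) for every on/off combination of the three variables."""
    for background, turbidity, distractors in product((False, True), repeat=3):
        yield CONDITION_NAMES[(background, turbidity, distractors)], {
            "video_background": background,
            "turbidity": turbidity,
            "distractors": distractors,
        }


for name, flags in conditions():
    print(f"{name:9} {flags}")
```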

Summary

BrackishMOT: The Brackish Multi-Object Tracking Dataset is a dataset for object detection and multi-object tracking tasks. It is used in environmental research and in the fishery industry.

The dataset consists of 76618 images with 894800 labeled objects belonging to 6 different classes: small fish, crab, and starfish, as well as the less frequent fish, shrimp, and jellyfish.

Images in the BrackishMOT dataset have bounding box annotations. There are 10570 (14% of the total) unlabeled images (i.e. images without annotations). There are 2 splits in the dataset: train (73131 images) and test (3487 images). Alternatively, the dataset can be split into 2 image types, synthetic (60000 images) and real (16618 images). It can also be split into the 8 conditions of the synthetic environment, each with 7500 images:

- plain background, no turbidity, no distractors
- plain background, no turbidity, with distractors
- plain background, with turbidity, no distractors
- plain background, with turbidity and distractors
- video background, no turbidity, no distractors
- video background with distractors, but no turbidity
- video background with turbidity and distractors
- video background with turbidity, but without distractors

Additionally, every image is tagged with its sequence, and every label is tagged with its target ID. The dataset was released in 2023 by Aalborg University, Denmark, and the Pioneer Center for AI, Denmark.
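The counts above are consistent with one another. The per-condition figure also follows from the framework parameters described earlier: 50 sequences of 10 seconds at 15 FPS. A quick arithmetic check:

```python
TOTAL_IMAGES = 76618
UNLABELED = 10570
SPLITS = {"train": 73131, "test": 3487}
SOURCES = {"synthetic": 60000, "real": 16618}
SEQUENCES_PER_CONDITION = 50
FRAMES_PER_SEQUENCE = 10 * 15  # 10 seconds at 15 FPS
N_CONDITIONS = 8

per_condition = SEQUENCES_PER_CONDITION * FRAMES_PER_SEQUENCE
assert sum(SPLITS.values()) == TOTAL_IMAGES
assert sum(SOURCES.values()) == TOTAL_IMAGES
assert per_condition * N_CONDITIONS == SOURCES["synthetic"]
print(f"images per synthetic condition: {per_condition}")
print(f"unlabeled share: {UNLABELED / TOTAL_IMAGES:.1%}")
```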


Class balance

There are 6 annotation classes in the dataset. The table below gives general statistics for every class.

| Class | Images | Objects | Count on image average | Area on image average |
|---|---|---|---|---|
| small fish (rectangle) | 59984 | 873191 | 14.56 | 4.24% |
| crab (rectangle) | 5363 | 11737 | 2.19 | 0.74% |
| starfish (rectangle) | 5223 | 7191 | 1.38 | 0.73% |
| fish (rectangle) | 1971 | 1971 | 1 | 3.6% |
| shrimp (rectangle) | 403 | 403 | 1 | 0.51% |
| jellyfish (rectangle) | 307 | 307 | 1 | 0.36% |

Co-occurrence matrix

The co-occurrence matrix shows, for every pair of classes, how many images contain objects of both classes at the same time.
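The sketch below shows how such a matrix can be computed from per-image class labels. The input layout and function name are assumptions, and the toy labels are made up.

```python
from collections import Counter
from itertools import combinations


def co_occurrence(image_labels):
    """Count, for every pair of classes, the images that contain both.

    image_labels: iterable of per-image lists of class names (one entry per object).
    Diagonal entries (c, c) count the images that contain class c at all.
    """
    counts = Counter()
    for labels in image_labels:
        present = sorted(set(labels))  # each class counts once per image
        for c in present:
            counts[(c, c)] += 1
        for a, b in combinations(present, 2):
            counts[(a, b)] += 1
            counts[(b, a)] += 1  # keep the matrix symmetric
    return counts


toy = [["small fish", "small fish", "crab"], ["crab", "starfish"], ["small fish"]]
matrix = co_occurrence(toy)
print(matrix[("small fish", "crab")], matrix[("crab", "crab")])
```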


Object distribution

The object distribution heatmap shows, for every class, how many images in the dataset contain a given number of objects of that class.
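A minimal way to compute this distribution from per-image label lists is shown below. It assumes that an image counts toward a class only if it contains at least one object of that class. The function name and toy data are hypothetical.

```python
from collections import Counter, defaultdict


def object_distribution(image_labels):
    """Map class -> Counter{objects per image: number of images}.

    Only images that contain at least one object of a class are counted for it.
    """
    dist = defaultdict(Counter)
    for labels in image_labels:
        for cls, n in Counter(labels).items():
            dist[cls][n] += 1
    return dist


toy = [["small fish", "small fish", "crab"], ["crab", "starfish"], ["small fish"]]
for cls, hist in sorted(object_distribution(toy).items()):
    print(cls, dict(sorted(hist.items())))
```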

Class sizes

The table below gives size properties of the objects in every class. Percentages are relative to the corresponding image dimension (height, width, or area).

| Class | Object count | Avg area | Max area | Min area | Min height | Min height (%) | Max height | Max height (%) | Avg height | Avg height (%) | Min width | Min width (%) | Max width | Max width (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| small fish (rectangle) | 873191 | 0.31% | 100% | 0% | 1px | 0.18% | 544px | 100% | 24px | 4.19% | 1px | 0.1% | 960px | 100% |
| crab (rectangle) | 11737 | 0.34% | 37.92% | 0.04% | 21px | 1.94% | 999px | 92.5% | 68px | 6.28% | 29px | 1.51% | 787px | 40.99% |
| starfish (rectangle) | 7191 | 0.55% | 1.43% | 0.13% | 45px | 4.17% | 165px | 15.28% | 91px | 8.41% | 45px | 2.34% | 193px | 10.05% |
| fish (rectangle) | 1971 | 3.6% | 31.71% | 0.01% | 17px | 1.57% | 827px | 76.57% | 213px | 19.75% | 11px | 0.57% | 947px | 49.32% |
| shrimp (rectangle) | 403 | 0.51% | 2.44% | 0.07% | 27px | 2.5% | 259px | 23.98% | 78px | 7.27% | 31px | 1.61% | 297px | 15.47% |
| jellyfish (rectangle) | 307 | 0.36% | 1.63% | 0.05% | 25px | 2.31% | 189px | 17.5% | 72px | 6.68% | 27px | 1.41% | 239px | 12.45% |

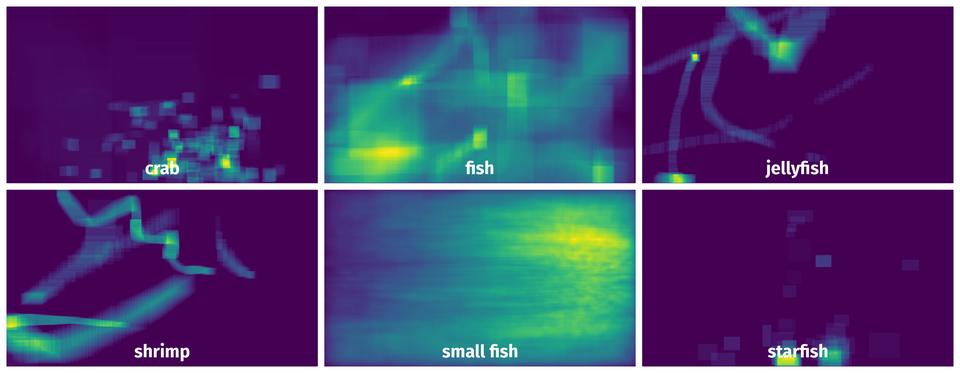

Spatial heatmap

The spatial heatmaps show how object locations within the image are distributed for every class. They reveal the most probable and the rarest object locations and help analyze how objects are placed across the dataset.
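A coarse version of such a heatmap can be built by binning normalized box centers into a grid. This sketch uses box centers and a 4 × 4 grid, both of which are assumptions. The heatmaps on this page may be computed differently, for example by accumulating full box areas. The toy boxes use the 544 x 960 synthetic frame size from the objects table, and the function name is hypothetical.

```python
def spatial_heatmap(boxes, rows=4, cols=4):
    """Accumulate normalised box centres into a rows x cols grid of counts.

    boxes: iterable of (left, top, width, height, img_w, img_h) in pixels.
    """
    heat = [[0] * cols for _ in range(rows)]
    for left, top, width, height, img_w, img_h in boxes:
        cx = (left + width / 2) / img_w  # centre x as a fraction of image width
        cy = (top + height / 2) / img_h  # centre y as a fraction of image height
        # Clamp so centres on (or just outside) the border land in an edge cell.
        col = min(max(int(cx * cols), 0), cols - 1)
        row = min(max(int(cy * rows), 0), rows - 1)
        heat[row][col] += 1
    return heat


toy_boxes = [
    (0, 0, 10, 10, 960, 544),       # top-left corner
    (950, 534, 10, 10, 960, 544),   # bottom-right corner
    (470, 262, 20, 20, 960, 544),   # image centre
]
for line in spatial_heatmap(toy_boxes):
    print(line)
```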

Objects

The full objects table contains 101492 objects. Its first ten rows are shown below.

| Object ID | Class | Image name | Image size (height x width) | Height | Height (%) | Width | Width (%) | Area (%) |
|---|---|---|---|---|---|---|---|---|
| 1 | small fish (rectangle) | D_brackishMOTSynth-03_000075.jpg | 544 x 960 | 26px | 4.78% | 19px | 1.98% | 0.09% |
| 2 | small fish (rectangle) | D_brackishMOTSynth-03_000075.jpg | 544 x 960 | 13px | 2.39% | 18px | 1.88% | 0.04% |
| 3 | small fish (rectangle) | D_brackishMOTSynth-03_000075.jpg | 544 x 960 | 31px | 5.7% | 25px | 2.6% | 0.15% |
| 4 | small fish (rectangle) | D_brackishMOTSynth-03_000075.jpg | 544 x 960 | 10px | 1.84% | 21px | 2.19% | 0.04% |
| 5 | small fish (rectangle) | D_brackishMOTSynth-03_000075.jpg | 544 x 960 | 18px | 3.31% | 28px | 2.92% | 0.1% |
| 6 | small fish (rectangle) | B_brackishMOTSynth-47_000138.jpg | 544 x 960 | 23px | 4.23% | 73px | 7.6% | 0.32% |
| 7 | small fish (rectangle) | B_brackishMOTSynth-47_000138.jpg | 544 x 960 | 22px | 4.04% | 110px | 11.46% | 0.46% |
| 8 | small fish (rectangle) | BF_brackishMOTSynth-37_000046.jpg | 544 x 960 | 65px | 11.95% | 44px | 4.58% | 0.55% |
| 9 | small fish (rectangle) | BF_brackishMOTSynth-37_000046.jpg | 544 x 960 | 41px | 7.54% | 30px | 3.12% | 0.24% |
| 10 | small fish (rectangle) | BF_brackishMOTSynth-37_000046.jpg | 544 x 960 | 23px | 4.23% | 61px | 6.35% | 0.27% |
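The relative values in the size tables appear to be the box dimensions divided by the corresponding image dimensions. Under that reading, rows 1 and 7 above reproduce exactly. The helper name below is hypothetical.

```python
def relative_size(height_px, width_px, img_h, img_w):
    """Express a box's height, width and area as percentages of the image."""
    return (
        100.0 * height_px / img_h,
        100.0 * width_px / img_w,
        100.0 * height_px * width_px / (img_h * img_w),
    )


# Object 1 from the table: a 26 x 19 px box in a 544 x 960 synthetic frame.
h_pct, w_pct, area_pct = relative_size(26, 19, 544, 960)
print(f"{h_pct:.2f}% {w_pct:.2f}% {area_pct:.2f}%")
```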

License

BrackishMOT: The Brackish Multi-Object Tracking Dataset is under CC BY-NC-SA 4.0 license.

Citation

If you make use of the BrackishMOT data, please cite the following reference:

```bibtex
@InProceedings{Pedersen_2023,
  author = {Pedersen, Malte and Lehotský, Daniel and Nikolov, Ivan and Moeslund, Thomas B.},
  doi = {10.48550/ARXIV.2302.10645},
  title = {BrackishMOT: The Brackish Multi-Object Tracking Dataset},
  publisher = {arXiv},
  year = {2023}
}
```

If you use the visualizations and tools provided by Dataset Ninja in your work, please cite:

```bibtex
@misc{visualization-tools-for-brackish-mot-dataset,
  title = {Visualization Tools for BrackishMOT Dataset},
  type = {Computer Vision Tools},
  author = {Dataset Ninja},
  howpublished = {\url{https://datasetninja.com/brackish-mot}},
  url = {https://datasetninja.com/brackish-mot},
  journal = {Dataset Ninja},
  publisher = {Dataset Ninja},
  year = {2026},
  month = {feb},
  note = {visited on 2026-02-20}
}
```

Download

The BrackishMOT dataset can be downloaded in Supervisely format.

Alternatively, it can be downloaded with the dataset-tools package:

```shell
pip install --upgrade dataset-tools
```

and the following Python code:

```python
import dataset_tools as dtools

# Download BrackishMOT in Supervisely format into the given directory.
dtools.download(dataset='BrackishMOT', dst_dir='~/dataset-ninja/')
```

The Python code example on the Supervisely Developer Portal shows how to work with the downloaded dataset.

The data in its original format can also be downloaded from the original source.

Disclaimer

Our gal from the legal dep told us we need to post this:

Dataset Ninja provides visualizations and statistics for some datasets that can be found online and downloaded by the general audience. Dataset Ninja is not a dataset hosting platform and can only be used for informational purposes. The platform does not claim any rights to the original content, including images, videos, annotations, and descriptions. Joint publishing is prohibited.

You take full responsibility when you use the datasets presented on Dataset Ninja, as well as other information, including the visualizations and statistics we provide. You are responsible for complying with the dataset license and all other permissions. You are required to visit the dataset's homepage and make sure that you are permitted to use it. If you have any questions, get in touch with us at hello@datasetninja.com.