Introduction #

The authors built the DeepDoors Version 2.0 Dataset, a 3D and RGB door dataset with images from several indoor environments, captured with a 3D Realsense D435 camera. It consists of three parts: a 2D and 3D image classification part, a semantic segmentation part and an object detection part. Main features:

- The authors propose three different door state classification methods, each using different types of information, and all capable of working in real time on low-power systems.

- The labelled dataset with RGB and depth images of closed, open and semi-open doors for 2D and 3D door state classification.

- The dataset for 2D door segmentation with annotated doors and door frames.

- The dataset for 2D door detection properly annotated.

Motivation

Every day, advancements in technology lead to the creation of new mobile robots equipped with superior components and software tailored for various purposes. These robots serve a multitude of functions, ranging from smart vacuum cleaners and delivery robots to security assistants and nursing aids, along with intelligent housekeeping systems. These innovations aim to alleviate the challenges people face in their daily tasks.

An essential aspect of these intelligent systems is the detection and classification of door states, which typically involve identifying whether a door is closed, open, or partially open (semi-open). This capability is crucial for ensuring the safe navigation of mobile robots within indoor spaces. As these systems often need to maneuver between rooms and interact with doors, providing them with accurate information about door states is imperative to facilitate seamless navigation.

The significance of door state classification extends beyond the realm of mobile robots and robotics. It can also find applications in assisting visually impaired individuals in safely navigating indoor environments. By providing information about the locations of doors and their current states, such technology can empower individuals with visual impairments to move between rooms with confidence and ease.

Dataset description

The authors present three distinct methods for door state classification, each leveraging different types of information: 1) exclusively utilizing 3D data; 2) utilizing both 3D and 2D (RGB) data; and 3) relying solely on 2D (RGB) data. Their primary focus is on developing an approach suitable for low-power systems, such as single-board computers. The emphasis lies in refining door detection and state classification algorithms, independent of other aspects of robot hardware.

While many existing methods primarily concentrate on door detection without delving into state classification, some also incorporate door handle detection to facilitate robot grasping. The authors advocate for a protocol where, upon classifying the door state as closed, the robot initiates a call for human assistance. If the door is detected as open, the robot proceeds to traverse it effortlessly. In cases where the door is identified as semi-open, the robot may either navigate around it or attempt to gently push it open.
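The protocol described above can be sketched as a small decision function. This is only an illustration: the names `DoorState`, `choose_action` and the `can_push` flag, as well as the action strings, are hypothetical and do not come from the authors' code.

```python
from enum import Enum


class DoorState(Enum):
    """The three door states used for classification in the dataset."""
    CLOSED = "closed"
    OPEN = "open"
    SEMI_OPEN = "semi-open"


def choose_action(state: DoorState, can_push: bool = False) -> str:
    """Map a classified door state to a high-level robot action (hypothetical names)."""
    if state is DoorState.CLOSED:
        # Closed door: the robot calls for human assistance.
        return "request_human_assistance"
    if state is DoorState.OPEN:
        # Open door: the robot simply goes through.
        return "traverse"
    # Semi-open door: gently push it open, or navigate around it.
    return "push_gently" if can_push else "navigate_around"
```

The `can_push` flag stands in for whatever criterion a real robot would use to decide between pushing a semi-open door and going around it; the source does not specify that criterion.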

The DeepDoors Version 2.0 Dataset comprises three main components: a section dedicated to 2D and 3D image classification, another for semantic segmentation, and a third for object detection. This dataset was curated using a portable system consisting of a Raspberry Pi 3 B+ powered by a power bank, alongside a 3D Realsense Camera (model D435). Notably, this camera boasts a wider horizontal viewing angle (86°) compared to its vertical counterpart (57°). To ensure comprehensive coverage of the door area within the images, the camera was rotated by 90°. Positioned 135 cm above the floor, the camera captured various images of doors and their surroundings, featuring diverse textures, sizes, and potential obstacles such as furniture, individuals, or other objects that partially obscure the door. The objective was to assemble a dataset that reflects real-world scenarios realistically. Additionally, to capture different perspectives of the same door, the camera’s pose was adjusted accordingly. The images were sourced from diverse locations including the authors’ university, public spaces, and private residences. Each image was manually captured using a portable system equipped with a Jetson Nano, and subsequently annotated using the CVAT tool for door segmentation, detection, and state classification.

The dataset consists of RGB images and corresponding depth images, each 480 × 640 pixels. The depth images are grey-scale with pixel values between 0 and 255, and the authors used a depth scale of 1/16. The depth in meters equals depth scale × pixel value; for example, a pixel value of 32 means that the pixel is 2 m away from the viewer (1/16 × 32 = 2). In total, the dataset has 3000 door images, 1000 samples for each class: open, closed and semi-open doors. It was randomly split into 2400 samples for training, 300 for validation and 300 for testing.
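The depth conversion described above can be written in a few lines of NumPy. This is a minimal sketch that assumes the depth image has already been loaded as an 8-bit array; the function name `depth_to_metres` is illustrative and not part of any dataset tooling.

```python
import numpy as np

DEPTH_SCALE = 1 / 16  # metres per grey level, as stated in the description


def depth_to_metres(depth_image: np.ndarray) -> np.ndarray:
    """Convert an 8-bit grey-scale depth image to distances in metres."""
    # Cast first so the multiplication is done in floating point.
    return depth_image.astype(np.float32) * DEPTH_SCALE


# Three sample pixel values: the minimum, the worked example (32), the maximum.
pixels = np.array([[0, 32, 255]], dtype=np.uint8)
metres = depth_to_metres(pixels)
```

With this scale, the largest pixel value (255) corresponds to 255/16 = 15.9375 m, so the encoded depth range is just under 16 m.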

Sample images from the DeepDoors Version 2.0 dataset

An advantage of the authors’ dataset, beyond its ample sample size, is its rich diversity of environments. It comprises images from various settings, including university facilities, residential interiors, and countryside homes. The dataset also includes numerous blurred images to replicate real-world conditions, along with images in which obstacles partially obscure the doors, mirroring practical scenarios.

Summary #

DeepDoors Version 2.0 Dataset is a dataset for instance segmentation, semantic segmentation, object detection, classification, and monocular depth estimation tasks. It is used in the robotics industry.

The dataset consists of 6000 images with 6142 labeled objects belonging to a single class (door).

Images in the DeepDoors2 dataset have pixel-level instance segmentation annotations. Due to the nature of the instance segmentation task, it can be automatically transformed into a semantic segmentation (only one mask for every class) or object detection (bounding boxes for every object) task. All images are labeled (i.e. with annotations). There are 3 splits in the dataset: train (4800 images), test (600 images), and val (600 images). Alternatively, the dataset can be split by 3 door positions: close (2000 images), open (2000 images), and semi-open (2000 images). Additionally, images are grouped by im_id. The dataset was released in 2021 by NOVA LINCS, Portugal and Universidade da Beira Interior, Portugal.
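The two conversions mentioned above (instance masks to bounding boxes, and instance masks to one semantic mask per class) can be sketched with NumPy. The sketch assumes each instance is available as a non-empty boolean array of image size; the function names are illustrative and this is not the platform's own conversion code.

```python
import numpy as np


def mask_to_bbox(mask: np.ndarray) -> tuple[int, int, int, int]:
    """Return (x_min, y_min, x_max, y_max), inclusive, of a non-empty boolean mask."""
    rows = np.flatnonzero(mask.any(axis=1))  # row indices containing mask pixels
    cols = np.flatnonzero(mask.any(axis=0))  # column indices containing mask pixels
    return int(cols[0]), int(rows[0]), int(cols[-1]), int(rows[-1])


def merge_to_semantic(masks: list[np.ndarray]) -> np.ndarray:
    """Union all instance masks of one class into a single semantic mask."""
    return np.logical_or.reduce(masks)


# Two synthetic door instances on a 640 x 480 (height x width) image.
mask_a = np.zeros((640, 480), dtype=bool)
mask_a[100:550, 50:187] = True
mask_b = np.zeros((640, 480), dtype=bool)
mask_b[0:10, 400:480] = True

bbox_a = mask_to_bbox(mask_a)
semantic = merge_to_semantic([mask_a, mask_b])
```

Because the dataset has a single class (door), the semantic mask is simply the union of all door instances in an image.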

Class balance #

There is 1 annotation class in the dataset. Find the general statistics and balances for every class in the table below.

| Class | Images | Objects | Count on image (average) | Area on image (average) |
|---|---|---|---|---|
| door mask | 6000 | 6142 | 1.02 | 22.35% |

Class sizes #

The table below gives various size properties of objects for every class. Click a row to see the image with annotations of the selected class. Sort columns to find classes with the smallest or largest objects or understand the size differences between classes.

| Class | Object count | Avg area | Max area | Min area | Min height (px) | Min height (%) | Max height (px) | Max height (%) | Avg height (px) | Avg height (%) | Min width (px) | Min width (%) | Max width (px) | Max width (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| door mask | 6142 | 21.84% | 91.12% | 0.57% | 14px | 2.19% | 640px | 100% | 469px | 73.32% | 15px | 3.12% | 480px | 100% |
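The percentage columns can be read as pixel lengths relative to the 640 × 480 (height × width) image size. A minimal sketch of that arithmetic follows; the helper name `percent_of` is hypothetical, and the two-decimal rounding is an assumption based on the values shown.

```python
IMAGE_HEIGHT, IMAGE_WIDTH = 640, 480  # pixels, height x width


def percent_of(length_px: int, image_side_px: int) -> float:
    """Express a pixel length as a percentage of the image side, to 2 decimals."""
    return round(100 * length_px / image_side_px, 2)


min_height_pct = percent_of(14, IMAGE_HEIGHT)   # minimum object height
max_width_pct = percent_of(480, IMAGE_WIDTH)    # maximum object width
```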

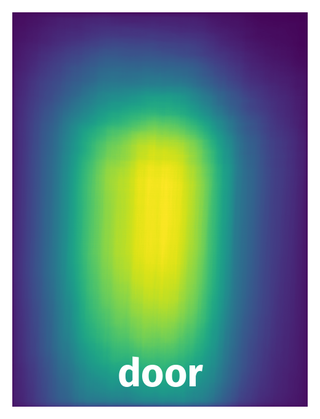

Spatial Heatmap #

The heatmaps below give the spatial distributions of all objects for every class. These visualizations provide insights into the most probable and rare object locations on the image and help analyze object placement in the dataset.

Objects #

The dataset contains 6142 objects; the table below shows 10 of them.

| Object ID | Class | Image name | Image size (height x width) | Height (px) | Height (%) | Width (px) | Width (%) | Area (%) |
|---|---|---|---|---|---|---|---|---|
| 1 | door mask | Door0864.png | 640 x 480 | 450px | 70.31% | 137px | 28.54% | 15.66% |
| 2 | door mask | Door1080.png | 640 x 480 | 420px | 65.62% | 336px | 70% | 17.4% |
| 3 | door mask | depth_Door0093.png | 640 x 480 | 523px | 81.72% | 215px | 44.79% | 34.59% |
| 4 | door mask | depth_Door1435.png | 640 x 480 | 584px | 91.25% | 215px | 44.79% | 14.79% |
| 5 | door mask | Door0927.png | 640 x 480 | 350px | 54.69% | 143px | 29.79% | 14.42% |
| 6 | door mask | depth_Door0683.png | 640 x 480 | 544px | 85% | 221px | 46.04% | 34.42% |
| 7 | door mask | Door2916.png | 640 x 480 | 532px | 83.12% | 296px | 61.67% | 30.27% |
| 8 | door mask | Door0486.png | 640 x 480 | 397px | 62.03% | 141px | 29.38% | 14.8% |
| 9 | door mask | Door0785.png | 640 x 480 | 450px | 70.31% | 206px | 42.92% | 28.83% |
| 10 | door mask | depth_Door1831.png | 640 x 480 | 397px | 62.03% | 330px | 68.75% | 21.55% |

License #

The DeepDoors Version 2.0 Dataset is publicly available.

Citation #

If you make use of the DeepDoors2 data, please cite the following reference:

@article{article,
  author = {Ramôa, João and Lopes, Vasco and Alexandre, Luís and Mogo, Sandra},
  year = {2021},
  month = {05},
  pages = {},
  title = {Real-time 2D–3D door detection and state classification on a low-power device},
  volume = {3},
  journal = {SN Applied Sciences},
  doi = {10.1007/s42452-021-04588-3}
}

If you are happy with Dataset Ninja and use provided visualizations and tools in your work, please cite us:

@misc{ visualization-tools-for-deep-doors2-dataset,
  title = { Visualization Tools for DeepDoors2 Dataset },
  type = { Computer Vision Tools },
  author = { Dataset Ninja },
  howpublished = { \url{ https://datasetninja.com/deep-doors2 } },
  url = { https://datasetninja.com/deep-doors2 },
  journal = { Dataset Ninja },
  publisher = { Dataset Ninja },
  year = { 2026 },
  month = { feb },
  note = { visited on 2026-02-23 },
}

Download #

Dataset DeepDoors2 can be downloaded in Supervisely format:

As an alternative, it can be downloaded with the dataset-tools package:

pip install --upgrade dataset-tools

using the following Python code:

import dataset_tools as dtools

dtools.download(dataset='DeepDoors2', dst_dir='~/dataset-ninja/')

Make sure not to overlook the python code example available on the Supervisely Developer Portal. It will give you a clear idea of how to effortlessly work with the downloaded dataset.
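As a rough starting point, the downloaded files can also be walked with the standard library alone. The sketch below assumes the usual Supervisely layout, in which each split directory holds an `img/` folder and an `ann/` folder with one `<image name>.json` file per image; this layout is an assumption and should be checked against the actual download. The function name `pair_images_with_annotations` is hypothetical.

```python
from pathlib import Path


def pair_images_with_annotations(dataset_dir: Path) -> list[tuple[Path, Path]]:
    """Pair each image in <dataset_dir>/img with <dataset_dir>/ann/<image name>.json.

    Assumes the Supervisely-style layout described above; images without a
    matching annotation file are skipped.
    """
    pairs = []
    for image_path in sorted((dataset_dir / "img").iterdir()):
        ann_path = dataset_dir / "ann" / (image_path.name + ".json")
        if ann_path.is_file():
            pairs.append((image_path, ann_path))
    return pairs
```

Each returned annotation path can then be parsed with `json.load` to inspect the labeled door objects.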

The data in original format can be downloaded here.

Disclaimer #

Our gal from the legal dep told us we need to post this:

Dataset Ninja provides visualizations and statistics for some datasets that can be found online and can be downloaded by general audience. Dataset Ninja is not a dataset hosting platform and can only be used for informational purposes. The platform does not claim any rights for the original content, including images, videos, annotations and descriptions. Joint publishing is prohibited.

You take full responsibility when you use datasets presented at Dataset Ninja, as well as other information, including visualizations and statistics we provide. You are in charge of compliance with any dataset license and all other permissions. You are required to visit the dataset's homepage and make sure that you can use it. In case of any questions, get in touch with us at hello@datasetninja.com.