Introduction #

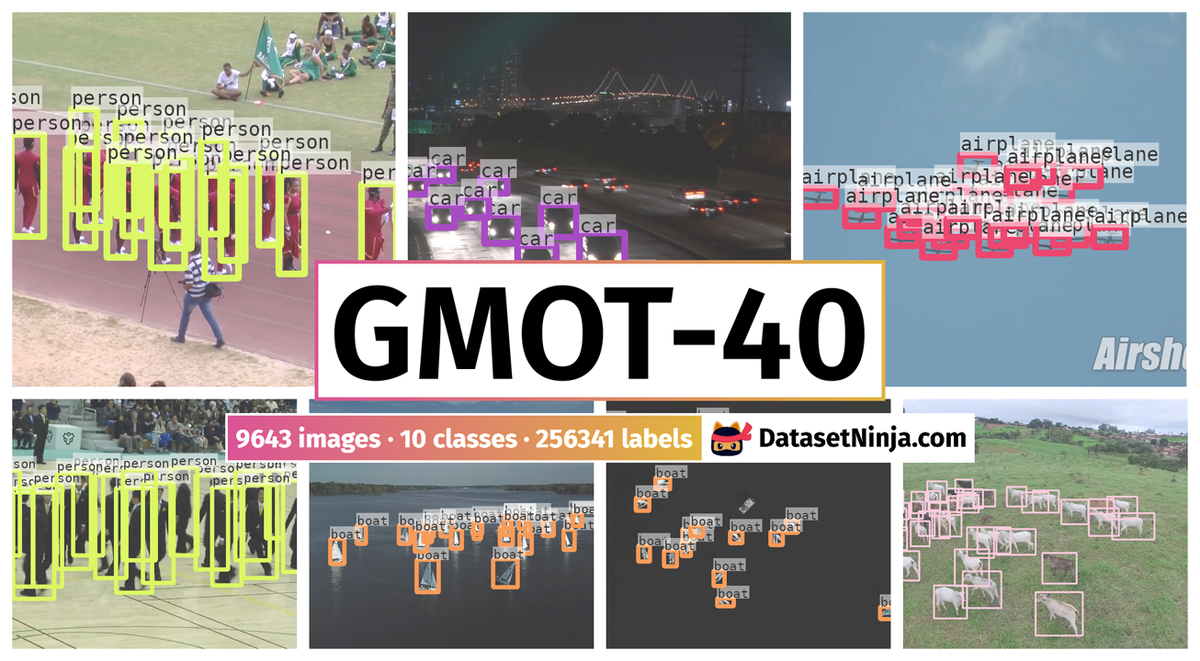

The authors constructed the first publicly available dense GMOT-40: Generic Multiple Object Tracking Dataset, dubbed GMOT-40, which contains 40 carefully annotated sequences evenly distributed among 10 object categories. Each sequence features multiple objects belonging to the same category, with an average of approximately 22 objects per frame. These sequences present various challenges inherent to real-world tracking scenarios, including heavy blur, occlusion, and other complicating factors.

Motivation

The field of Multiple Object Tracking (MOT) has been extensively explored within the computer vision community due to its diverse applications spanning robotics, surveillance, autonomous driving, and cell tracking, among others. Recent advancements in MOT owe much to the refinement of key components such as detection, single object tracking, and association. Additionally, the proliferation of MOT benchmarks has played a significant role in driving progress in the field. However, despite these advancements, previous research in MOT has predominantly focused on specific object categories such as pedestrians, cars, or cells, relying heavily on pre-trained models tailored to these objects. This approach raises questions about the generalizability of existing MOT algorithms to unseen objects, which limits the applicability of MOT to new domains with sparse data for training object detectors. In contrast, Generic Multiple Object Tracking (GMOT) seeks to address these challenges by operating without prior knowledge of the objects being tracked. GMOT holds promise for a wide array of applications including video editing, animal behavior analysis, and vision-based object counting. Despite its broad potential, GMOT remains relatively underexplored, with only limited early investigations conducted thus far. Upon comparing the progress in GMOT with that in MOT, it becomes evident that there is a notable absence of GMOT benchmarks and effective deep learning-based GMOT baselines. This gap underscores the need for further research and development in GMOT, particularly in establishing standardized benchmarks and leveraging deep learning methodologies. It’s important to note that GMOT, as defined by the authors, involves tracking multiple objects belonging to a generic object class. Addressing the above issues, the authors contribute to the study of GMOT in three aspects: dataset, baseline, and evaluation.

Dataset description

The authors have developed GMOT-40, the first publicly available dense dataset for the comprehensive study of Generic Multiple Object Tracking (GMOT). GMOT-40 comprises 40 meticulously curated sequences spanning ten distinct categories, such as insects and balloons, with four sequences allocated per category. Each sequence features multiple objects belonging to the same category, with an average of approximately 22 objects per frame. To ensure accuracy and reliability, all sequences undergo manual annotation with meticulous validation and correction processes. These sequences present various challenges inherent to real-world tracking scenarios, including heavy blur, occlusion, and other complicating factors. To facilitate rigorous evaluation, a specific tracking protocol is adopted to assess different aspects of tracking algorithms. In response to the unique requirements of GMOT, the authors have devised a series of baseline tracking algorithms tailored for one-shot GMOT. These baselines are structured around a two-stage process: the first stage involves one-shot detection, adapted from the GlobalTrack algorithm, while the second stage employs target association techniques drawn from several standard Multiple Object Tracking (MOT) algorithms. In each baseline, the one-shot detection algorithm functions as the public detector. Subsequently, the authors conduct comprehensive evaluations on GMOT-40, involving classic tracking algorithms with necessary adjustments for the GMOT context. The findings of these evaluations underscore the significant potential for improvement in addressing the challenges posed by GMOT, indicating ample opportunities for further advancements in the field.

One-shot generic multiple object tracking (GMOT). (a): The input of one shot generic MOT is a single bounding box to indicate a target template in the first frame. (b): The target template is used to discover and propose all other target candidates of the same category, which is different than model-based MOT where a pretrained detector (typically class-specific) is required. (c): MOT then can be performed on the proposed candidates in either an online or offline manner. Yellow rectangles are zoomed-in local views of targets.

To tackle the issue of generalization observed in prior Multiple Object Tracking (MOT) research, GMOT-40 has been meticulously curated to feature a more comprehensive and diverse dataset. Unlike many previous datasets, which typically encompassed fewer than three categories, GMOT-40 comprises 40 sequences spanning 10 distinct categories. This substantial increase in category diversity is intended to provide a more robust testing ground for MOT algorithms. Within each category, GMOT-40 offers four sequences, carefully crafted to incorporate a wide range of variations and challenges. For instance, the “person” category within GMOT-40 encompasses not only typical instances of individuals as found in datasets like PASCAL-VOC but also introduces a novel category of individuals wearing “wingsuits.” Similarly, the “insect” category presents instances of both “ant” and “bee,” which are not commonly encountered in datasets such as MS-COCO or PASCAL-VOC. This deliberate inclusion of unseen object types within familiar categories serves to enhance the dataset’s capacity for testing the generalization capabilities of MOT algorithms across a broader spectrum of scenarios and object types.

Samples from each category of GMOT-40.

During the selection of sequences for GMOT-40, the authors have placed particular emphasis on including a diverse range of real-world challenges commonly encountered in tracking scenarios. These challenges encompass factors such as occlusion, targets entering or exiting the scene, rapid motion, and motion blur. Furthermore, the density of targets varies across sequences, spanning from 3 to 100 targets per frame, with an average density of approximately 26 targets per frame. These deliberate choices ensure that GMOT-40 encompasses a broad spectrum of scenarios, offering researchers a comprehensive dataset for evaluating tracking algorithms. To ensure the highest quality annotations, each frame within the sequences undergoes meticulous manual annotation to guarantee accuracy. This annotation process involves initial labeling followed by thorough validation and revision to rectify any discrepancies or errors. While additional sequences could further enhance the dataset’s utility, the significant manual effort required for annotation may delay the timely release of the dataset. It is worth noting that GMOT-40 represents a significant advancement over previously available GMOT datasets, offering comprehensive improvements and promising to catalyze further advancements in GMOT research in the future.

| Publication Year | # seq. | # cat. | # tgt. |

|---|---|---|---|

| Luo et al. | 2013 | 4 | 4 |

| Zhang et al. | 2014 | 9 | 9 |

| ≈3 | |||

| Luo et al. | 2014 | 8 | 8 |

| Zhu et al. | 2017 | 3 | 1 |

| Liu et al. | 2020 | 24 | 9 |

| GMOT-40 | 2021 | 40 | 10 |

Comparison of densely annotated data used in GMOT studies.

Data collection

The authors began by identifying 10 object categories likely to exhibit dense and crowded behavior. When choosing video sequences, they stipulated that at least 80% of the frames in a sequence should feature more than 10 targets. While most targets within the same category shared a similar appearance, some exhibited variations in appearance, mirroring real-world scenarios more accurately. They set a minimum sequence length of 100 frames. Once the classes and criteria were established, the authors commenced a search on YouTube for potential candidate videos. Initially, around 1000 sequences were identified as candidates. Following careful examination, 40 sequences were selected for their higher quality and greater challenge. However, it’s important to note that these 40 sequences were not yet ready for annotation. Some sequences contained significant segments unrelated to the task. For instance, in the balloon category, there were sections at the beginning and end focusing on stage performances or festival crowds, which needed to be removed. Consequently, the videos were meticulously edited, and only the best clips with a minimum length of 100 frames were chosen. Ultimately, GMOT-40 boasts an average of 50.65 trajectories per sequence. The entire dataset comprises 9,643 frames, with each sequence averaging 240 frames in length. Approximately 85.28% of the frames feature more than 10 targets. The frames per second (FPS) range from 24 to 30, and the resolution varies from 480p to 1080p.

Data annotation

The annotation format in GMOT follows that of MOT15, with one key distinction: there is no “out-of-view” value, meaning that all bounding boxes in the ground truth file are considered for evaluation protocols. Moreover, only targets within the same category are annotated. For instance, in the “stock” category, only the wolf would be annotated if the initial bounding box indicates that it is the object of interest. Additionally, targets within the same category are treated equally, such as red and white balloons. Ensuring a high-quality GMOT dataset involves manual labeling, double-checking, and error correction. To achieve this, a team of experts, including Ph.D. students, is involved in the annotation process. Each video is initially assigned to a labeler to determine the group of interest. An expert then reviews the target group to ensure it meets the requirements. Once approved, the labeler proceeds with the annotation. The completed annotation undergoes another round of review and potential revision by the experts.

GMOT-40 encompasses a broader range of scenarios and attributes compared to previous GMOT datasets utilized in research. For instance, classes such as person, ball, and insect exhibit characteristics like motion blur and fast motion. Additionally, the appearance of objects in the boat category is significantly influenced by the viewpoint. Moreover, challenges such as low resolution and camera motion are present in the “ball” and livestock categories, respectively. The abbreviation of attributes have the following meaning: CM – camera motion; ROT – target rotation; DEF – target deforms in the tracking; VC – significant viewpoint change that affects the appearance of target; MB – target is blurred due to camera or target motion; FM – fast motion of the targets with displacements larger than the bounding box; LR – target bounding box is smaller than 1024 pixel for at least 30% of the targets in the whole sequences. Although some of the attributes above are present in previous studies of GMOT, yet GMOT-40 is the most comprehensive one, since it is collected from various natural scenes. These miscellaneous attributes of GMOT-40 can help the community to evaluate their trackers from multiple aspects.

Number of sequences for different attributes in the GMOT-40.

Note: the authors did not provide information in the dataset about the belonging of the specified attributes to a specific sequence.

Summary #

GMOT-40: Generic Multiple Object Tracking Dataset is a dataset for object detection and identification tasks. It is used in the surveillance industry.

The dataset consists of 9643 images with 256341 labeled objects belonging to 10 different classes including bird, car, airplane, and other: stock, person, balloon, boat, ball, insect, and fish.

Images in the GMOT-40 dataset have bounding box annotations. All images are labeled (i.e. with annotations). There are no pre-defined train/val/test splits in the dataset. Additionally, every image marked with its sequence tag. Every label contains information about its identity id. Explore it in Supervisely labelling tool. The dataset was released in 2021 by the Temple University Philadelphia, USA, Stony Brook University, USA, and Microsoft Redmond, USA.

Explore #

GMOT-40 dataset has 9643 images. Click on one of the examples below or open "Explore" tool anytime you need to view dataset images with annotations. This tool has extended visualization capabilities like zoom, translation, objects table, custom filters and more. Hover the mouse over the images to hide or show annotations.

Class balance #

There are 10 annotation classes in the dataset. Find the general statistics and balances for every class in the table below. Click any row to preview images that have labels of the selected class. Sort by column to find the most rare or prevalent classes.

Class ㅤ | Images ㅤ | Objects ㅤ | Count on image average | Area on image average |

|---|---|---|---|---|

bird➔ rectangle | 1336 | 27246 | 20.39 | 8.77% |

car➔ rectangle | 1193 | 28097 | 23.55 | 8.5% |

airplane➔ rectangle | 1181 | 23307 | 19.73 | 6.26% |

stock➔ rectangle | 1128 | 34058 | 30.19 | 16.3% |

person➔ rectangle | 1042 | 22650 | 21.74 | 12.19% |

balloon➔ rectangle | 970 | 42775 | 44.1 | 14.08% |

boat➔ rectangle | 836 | 21780 | 26.05 | 13.11% |

ball➔ rectangle | 729 | 20399 | 27.98 | 2.05% |

insect➔ rectangle | 659 | 14107 | 21.41 | 13.76% |

fish➔ rectangle | 569 | 21922 | 38.53 | 35.41% |

Co-occurrence matrix #

Co-occurrence matrix is an extremely valuable tool that shows you the images for every pair of classes: how many images have objects of both classes at the same time. If you click any cell, you will see those images. We added the tooltip with an explanation for every cell for your convenience, just hover the mouse over a cell to preview the description.

Images #

Explore every single image in the dataset with respect to the number of annotations of each class it has. Click a row to preview selected image. Sort by any column to find anomalies and edge cases. Use horizontal scroll if the table has many columns for a large number of classes in the dataset.

Class sizes #

The table below gives various size properties of objects for every class. Click a row to see the image with annotations of the selected class. Sort columns to find classes with the smallest or largest objects or understand the size differences between classes.

Class | Object count | Avg area | Max area | Min area | Min height | Min height | Max height | Max height | Avg height | Avg height | Min width | Min width | Max width | Max width |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

balloon rectangle | 42775 | 0.34% | 18.62% | 0% | 6px | 0.56% | 678px | 62.78% | 65px | 5.98% | 5px | 0.26% | 637px | 33.18% |

stock rectangle | 34058 | 0.78% | 26.96% | 0.01% | 24px | 2.37% | 618px | 57.22% | 102px | 9.75% | 7px | 0.36% | 1073px | 55.89% |

car rectangle | 28097 | 0.37% | 3.8% | 0% | 4px | 0.37% | 240px | 26.81% | 62px | 6.22% | 4px | 0.21% | 293px | 16.48% |

bird rectangle | 27246 | 0.47% | 2.91% | 0.01% | 11px | 1.02% | 247px | 22.87% | 93px | 8.58% | 10px | 0.52% | 300px | 15.62% |

airplane rectangle | 23307 | 0.37% | 1.95% | 0.04% | 23px | 2.13% | 143px | 13.24% | 58px | 5.33% | 28px | 1.46% | 318px | 16.56% |

person rectangle | 22650 | 0.8% | 6.99% | 0% | 3px | 0.28% | 509px | 47.13% | 155px | 14.35% | 3px | 0.16% | 302px | 15.73% |

fish rectangle | 21922 | 1.25% | 13.43% | 0.01% | 11px | 1.02% | 407px | 37.69% | 108px | 10.04% | 6px | 0.31% | 747px | 38.91% |

boat rectangle | 21780 | 0.62% | 6.16% | 0.01% | 12px | 1.11% | 432px | 40% | 125px | 11.56% | 8px | 0.42% | 305px | 15.89% |

ball rectangle | 20399 | 0.08% | 5.33% | 0% | 3px | 0.28% | 328px | 30.37% | 35px | 3.28% | 5px | 0.26% | 347px | 18.07% |

insect rectangle | 14107 | 0.68% | 4.33% | 0.01% | 5px | 0.46% | 316px | 29.26% | 105px | 9.73% | 7px | 0.36% | 411px | 21.41% |

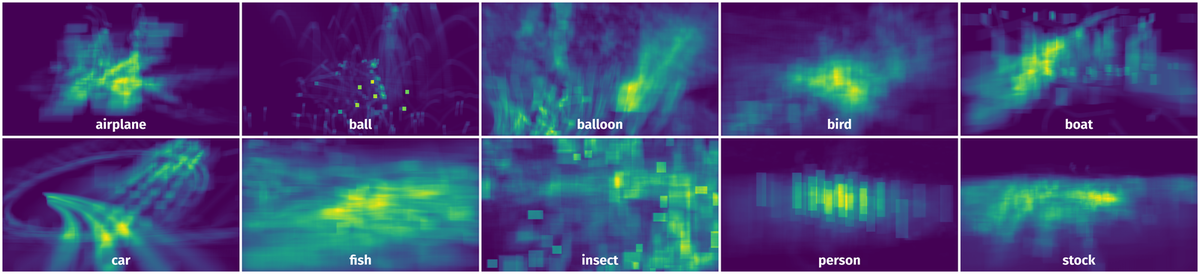

Spatial Heatmap #

The heatmaps below give the spatial distributions of all objects for every class. These visualizations provide insights into the most probable and rare object locations on the image. It helps analyze objects' placements in a dataset.

Objects #

Table contains all 98692 objects. Click a row to preview an image with annotations, and use search or pagination to navigate. Sort columns to find outliers in the dataset.

Object ID ㅤ | Class ㅤ | Image name click row to open | Image size height x width | Height ㅤ | Height ㅤ | Width ㅤ | Width ㅤ | Area ㅤ |

|---|---|---|---|---|---|---|---|---|

1➔ | bird rectangle | bird-3_000328.jpg | 1080 x 1920 | 110px | 10.19% | 121px | 6.3% | 0.64% |

2➔ | bird rectangle | bird-3_000328.jpg | 1080 x 1920 | 79px | 7.31% | 99px | 5.16% | 0.38% |

3➔ | bird rectangle | bird-3_000328.jpg | 1080 x 1920 | 101px | 9.35% | 90px | 4.69% | 0.44% |

4➔ | bird rectangle | bird-3_000328.jpg | 1080 x 1920 | 117px | 10.83% | 102px | 5.31% | 0.58% |

5➔ | bird rectangle | bird-3_000328.jpg | 1080 x 1920 | 118px | 10.93% | 104px | 5.42% | 0.59% |

6➔ | bird rectangle | bird-3_000328.jpg | 1080 x 1920 | 59px | 5.46% | 98px | 5.1% | 0.28% |

7➔ | bird rectangle | bird-3_000328.jpg | 1080 x 1920 | 136px | 12.59% | 87px | 4.53% | 0.57% |

8➔ | bird rectangle | bird-3_000328.jpg | 1080 x 1920 | 110px | 10.19% | 128px | 6.67% | 0.68% |

9➔ | bird rectangle | bird-3_000328.jpg | 1080 x 1920 | 131px | 12.13% | 104px | 5.42% | 0.66% |

10➔ | bird rectangle | bird-3_000328.jpg | 1080 x 1920 | 84px | 7.78% | 65px | 3.39% | 0.26% |

License #

GMOT-40: Generic Multiple Object Tracking Dataset is under CC BY-NC-SA 4.0 license.

Citation #

If you make use of the GMOT-40 data, please cite the following reference:

@inproceedings{bai2021gmot,

title={GMOT-40: A Benchmark for Generic Multiple Object Tracking},

author={Bai, Hexin and Cheng, Wensheng and Chu, Peng and Liu, Juehuan and Zhang, Kai and Ling, Haibin},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={6719--6728},

year={2021}

}

If you are happy with Dataset Ninja and use provided visualizations and tools in your work, please cite us:

@misc{ visualization-tools-for-gmot-dataset,

title = { Visualization Tools for GMOT-40 Dataset },

type = { Computer Vision Tools },

author = { Dataset Ninja },

howpublished = { \url{ https://datasetninja.com/gmot-40 } },

url = { https://datasetninja.com/gmot-40 },

journal = { Dataset Ninja },

publisher = { Dataset Ninja },

year = { 2026 },

month = { feb },

note = { visited on 2026-02-24 },

}Download #

Dataset GMOT-40 can be downloaded in Supervisely format:

As an alternative, it can be downloaded with dataset-tools package:

pip install --upgrade dataset-tools

… using following python code:

import dataset_tools as dtools

dtools.download(dataset='GMOT-40', dst_dir='~/dataset-ninja/')

Make sure not to overlook the python code example available on the Supervisely Developer Portal. It will give you a clear idea of how to effortlessly work with the downloaded dataset.

The data in original format can be downloaded here:

Disclaimer #

Our gal from the legal dep told us we need to post this:

Dataset Ninja provides visualizations and statistics for some datasets that can be found online and can be downloaded by general audience. Dataset Ninja is not a dataset hosting platform and can only be used for informational purposes. The platform does not claim any rights for the original content, including images, videos, annotations and descriptions. Joint publishing is prohibited.

You take full responsibility when you use datasets presented at Dataset Ninja, as well as other information, including visualizations and statistics we provide. You are in charge of compliance with any dataset license and all other permissions. You are required to navigate datasets homepage and make sure that you can use it. In case of any questions, get in touch with us at hello@datasetninja.com.