Introduction #

The authors introduce LVOS (Long-Term Video Object Segmentation), a benchmark dataset comprising 220 videos totaling 421 minutes and the first densely annotated long-term video object segmentation (VOS) dataset. Videos in LVOS have an average duration of 1.59 minutes, and every frame is manually annotated with the help of a semi-automatic annotation pipeline that corrects potential errors in tracking, segmentation, and prediction.

Disclaimer: The dataset consists of 220 sequences meant to be played as 6-FPS videos, but it is presented as individual annotated frames. Additionally, each object instance carries an id tag and a caption tag.

To ensure dataset quality, the authors meticulously select 220 videos from an initial pool of 600 720P-resolution candidate videos, maintaining a balance between video quality and representation. The resulting dataset encompasses 126,280 frames and 156,432 annotations, surpassing the combined size of other datasets. For training, validation, and testing purposes, the videos are partitioned into subsets while preserving distribution and video length characteristics, with 120 videos designated for training, 50 for validation, and 50 for testing. Annotations for training and validation sets are publicly available, fostering the development of VOS methods, while those for the testing set remain private for competition purposes.

Dataset Design

In addressing the absence of a dedicated dataset, LVOS strives to offer the community a new and specialized Video Object Segmentation (VOS) dataset for the training and assessment of resilient VOS models. The construction of LVOS adheres to the three principles outlined below.

- Long-Term VOS. Videos in existing VOS datasets typically last only 3-6 seconds. LVOS videos last approximately 1.59 minutes on average (equivalent to 574 frames at 6 FPS), about 20 times longer than short-term videos, which aligns more closely with real-world applications.

- Dense and high-quality annotation. The labor-intensive process of mask annotation significantly limits the temporal scope and size of existing VOS datasets. Training resilient VOS models and evaluating them in practical scenarios requires high-quality, densely annotated masks. Consequently, all frames in LVOS are manually annotated, with a semi-automatic annotation pipeline used to ensure precision.

- Comprehensive labeling. A category set relevant to daily life is designed, comprising 5 parent classes and 27 subclasses. These 27 categories extend beyond the COCO dataset and include additional classes such as frisbee. Of the 27 categories, 7 are designated as unseen categories, chosen to better evaluate model generalization.

Semi-Automatic Annotation Pipeline

The fatigue induced by the mask annotation process significantly curtails the scale of Video Object Segmentation (VOS) datasets. To address this limitation, the authors introduce an innovative semi-automatic annotation pipeline aimed at enhancing the efficiency of frame annotation. This pipeline is specifically structured into four distinct steps.

Annotation Pipeline, comprising four sequential steps. Step 1: 1 FPS Automatic Segmentation, where instance segmentation and tracking models are employed to automatically obtain the mask of the target object at 1 FPS. In Step 2, 1 FPS Manual Correction is performed, refining the masks obtained in Step 1 through manual adjustments. Step 3, Mask Propagation from 1 FPS to 6 FPS, involves extending the masks from 1 FPS to 6 FPS using a Video Object Segmentation (VOS) model. Finally, Step 4 entails 6 FPS Manual Correction, where the masks obtained in Step 3 are manually corrected.

Step 1: 1 FPS Automatic Segmentation. Initially, the authors employ Transfiner to produce pixel-wise segmentation for each object in the frames at a rate of 1 frame per second (FPS). Subsequently, the bounding boxes of the target objects are manually marked when they first appear, and MixFormer is used to propagate each box from the initial frame to all subsequent frames. By combining the pixel-wise segmentation and the bounding boxes in each frame, the masks of the target objects are obtained at a rate of 1 FPS.
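The source does not say how the segmentation output and the tracked box are combined. As a minimal sketch of one plausible rule, the code below picks the instance mask whose tight bounding box overlaps the tracked box the most. The function names, the box format `(x0, y0, x1, y1)`, and the `min_iou` threshold are all assumptions made for illustration, not the authors' implementation.

```python
import numpy as np


def mask_to_box(mask):
    """Return the tight (x0, y0, x1, y1) box around a boolean mask, or None if empty."""
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        return None
    # Right and bottom edges are exclusive.
    return int(xs.min()), int(ys.min()), int(xs.max()) + 1, int(ys.max()) + 1


def box_iou(a, b):
    """Intersection over Union of two (x0, y0, x1, y1) boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix1 - ix0) * max(0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def select_target_mask(instance_masks, tracked_box, min_iou=0.5):
    """Pick the instance mask whose box best matches the tracked box.

    Returns None when no mask overlaps enough, which would flag the frame
    for manual correction (hypothetical threshold, assumed here).
    """
    best_mask, best_iou = None, 0.0
    for mask in instance_masks:
        box = mask_to_box(mask)
        if box is None:
            continue
        iou = box_iou(box, tracked_box)
        if iou > best_iou:
            best_mask, best_iou = mask, iou
    return best_mask if best_iou >= min_iou else None
```

Frames for which `select_target_mask` returns `None` correspond to the missing or inaccurate masks that a manual correction pass would need to fix.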

Step 2: 1 FPS Manual Correction. Potential inaccuracies or the absence of target object masks in certain frames may arise from tracking errors, segmentation defects, and other prediction errors. To address this, the authors employ EISeg, an Efficient Interactive Segmentation Tool based on PaddlePaddle, to refine the masks. On average, around 30% of frames require correction through this refinement process.

Step 3: Mask Propagation. The authors employ a Video Object Segmentation (VOS) model, specifically AOT, to automatically extend the annotation masks obtained at 1 FPS in Step 2 to their adjacent unlabeled frames. This extension results in an increased frame rate from 1 FPS to 6 FPS.
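To make the 1 FPS to 6 FPS relationship concrete, the sketch below lists which frames of a 6 FPS sequence already carry a corrected mask and, for every other frame, which annotated frame its mask could be propagated from. It assumes that frame 0 is annotated and that every sixth frame after it is a 1 FPS keyframe, and it uses a nearest-keyframe rule. Both are assumptions for illustration; the source only states that AOT extends the masks to adjacent unlabeled frames.

```python
def propagation_schedule(num_frames, step=6):
    """Map each frame index to the keyframe its mask would be propagated from.

    Keyframes are the frames at indices 0, step, 2*step, ... and map to
    themselves. Other frames map to the nearer keyframe, falling back to
    the previous one when there is no later keyframe or on a tie.
    """
    keyframes = list(range(0, num_frames, step))
    schedule = {}
    for i in range(num_frames):
        prev_key = (i // step) * step
        next_key = prev_key + step
        if next_key < num_frames and (next_key - i) < (i - prev_key):
            schedule[i] = next_key
        else:
            schedule[i] = prev_key
    return keyframes, schedule


keyframes, schedule = propagation_schedule(13)
# keyframes == [0, 6, 12]; frame 4 is closer to keyframe 6 than to keyframe 0
```

With `step=6`, five of every six frames receive a propagated mask, which is why a second manual correction pass over the 6 FPS frames follows.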

Step 4: 6 FPS Manual Correction. Because the masks produced by the VOS model are imperfect, each frame is manually corrected until the result is satisfactory. Approximately 40% of frames require additional refinement in this step.

Time and Quality Analysis

To assess annotation quality, the authors randomly select 100 videos from the HQYouTubeVIS training set and reannotate them using their semi-automatic annotation pipeline. Comparing the results with the ground truth, the average Intersection over Union (IoU) score is 0.93, indicating substantial consistency between the annotation results and ground truth, thereby validating the effectiveness of the pipeline. Additionally, annotators record the total time overheads, revealing that, on average, one annotator can label an entire long-term video (500 frames at 6 FPS) in 60 minutes using the pipeline, whereas a skilled annotator would spend 1500 minutes using the traditional method (3 minutes for one frame). The pipeline proves to be a significant cost-reduction tool while maintaining annotation quality.
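The two quantities in this analysis can be reproduced with a few lines. The sketch below shows a standard mask IoU between a re-annotated mask and its ground truth, followed by the time arithmetic from the paragraph above. Treating two empty masks as perfect agreement is a convention assumed here, not something the source specifies.

```python
import numpy as np


def mask_iou(pred, gt):
    """Intersection over Union of two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    union = np.logical_or(pred, gt).sum()
    if union == 0:
        # Both masks are empty: counted as full agreement (assumed convention).
        return 1.0
    return float(np.logical_and(pred, gt).sum() / union)


# Time comparison using the figures quoted in the text.
frames_per_video = 500            # one long-term video at 6 FPS
manual_minutes_per_frame = 3      # traditional annotation
pipeline_minutes_per_video = 60   # semi-automatic pipeline

manual_minutes_per_video = frames_per_video * manual_minutes_per_frame  # 1500
speedup = manual_minutes_per_video / pipeline_minutes_per_video         # 25.0
```

Under these figures the pipeline is about 25 times faster per video than frame-by-frame manual annotation.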

Attributes

Disclaimer: The entirety of the following data is sourced directly from the original paper and regrettably lacks representation in the dataset metadata.

| Attribute | Definition |
|---|---|
| BC | Background Clutter. The appearances of background and target object are similar. |
| DEF | Deformation. Target appearance deforms complexly. |
| MB | Motion Blur. Boundaries of target object are blurred due to camera or object fast motion. |
| FM | Fast Motion. The per-frame motion of the target is larger than 20 pixels, computed as the centroid’s Euclidean distance. |
| LR | Low Resolution. The average ratio between the target box area and the image area is smaller than 0.1. |
| OCC | Occlusion. The target is partially or fully occluded in the video. |
| OV | Out-of-view. The target leaves the video frame completely. |
| SV | Scale Variation. The ratio of any pair of bounding boxes is outside the range [0.5, 2.0]. |
| DB | Dynamic Background. Background regions undergo deformation. |
| SC | Shape Complexity. Boundaries of the target object are complex. |
| AC | Appearance Change. Significant appearance change due to rotations and illumination changes. |
| | |
| LRA | Long-term Reappearance. Target object reappears after disappearing for at least 100 frames. |
| CTC | Cross-temporal Confusion. There are multiple different objects that are similar to the target object but do not appear at the same time. |

The table provides definitions for video attributes in LVOS, extending and modifying the previously defined short-term video challenges (top). This extension includes a complementary set of long-term video attributes (bottom).

For a thorough and extensive analysis of Video Object Segmentation (VOS) approaches, it is crucial to identify video attributes. Each sequence is labeled with 13 challenges, as outlined in the provided table. These attributes encompass challenges specific to short-term videos, derived from DAVIS, and are augmented with an additional set tailored for long-term videos. Notably, these attributes are not mutually exclusive, allowing for the presence of multiple challenges within a single video. In LVOS, common challenges include Scale Variation (SV), Occlusion (OCC), Low Resolution (LR), and Fast Motion (FM). A notable distinction is observed in challenges between short-term and long-term videos, attributed to the extended length of the latter, resulting in more complex and varied object motion and background changes not readily apparent in short-term videos. The variation in attribute distribution imposes distinct and heightened requirements on the design of VOS models.
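Several of the attributes in the table have numeric definitions and can be checked from per-frame bounding boxes. The sketch below covers FM, LR, SV, and LRA. It assumes boxes are `(x0, y0, x1, y1)` tuples with `None` for frames where the target is absent, reads "per-frame motion" as the mean centroid displacement between consecutive visible frames, and reads the SV "ratio" as a ratio of box areas. These readings and all function names are assumptions, not the authors' labeling code.

```python
import math


def box_area(b):
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def centroid(b):
    return (b[0] + b[2]) / 2.0, (b[1] + b[3]) / 2.0


def fast_motion(boxes, thresh=20.0):
    """FM: mean centroid displacement between consecutive frames exceeds thresh pixels."""
    dists = []
    for prev, cur in zip(boxes, boxes[1:]):
        if prev is None or cur is None:
            continue
        (px, py), (cx, cy) = centroid(prev), centroid(cur)
        dists.append(math.hypot(cx - px, cy - py))
    return bool(dists) and sum(dists) / len(dists) > thresh


def low_resolution(boxes, image_area, thresh=0.1):
    """LR: average ratio of target box area to image area is below thresh."""
    ratios = [box_area(b) / image_area for b in boxes if b is not None]
    return bool(ratios) and sum(ratios) / len(ratios) < thresh


def scale_variation(boxes, lo=0.5, hi=2.0):
    """SV: some pair of boxes has an area ratio outside [lo, hi]."""
    areas = [box_area(b) for b in boxes if b is not None and box_area(b) > 0]
    if len(areas) < 2:
        return False
    # The largest and smallest boxes give the most extreme pair ratios.
    return max(areas) / min(areas) > hi or min(areas) / max(areas) < lo


def long_term_reappearance(boxes, min_gap=100):
    """LRA: the target reappears after being absent for at least min_gap frames."""
    seen, gap = False, 0
    for b in boxes:
        if b is None:
            if seen:
                gap += 1
        else:
            if seen and gap >= min_gap:
                return True
            seen, gap = True, 0
    return False
```

Because the attributes are not mutually exclusive, a single sequence can satisfy several of these checks at once.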

Figures (not reproduced here): attributes distribution of each sequence in LVOS; main mutual dependencies among attributes; distribution comparison with DAVIS 2017.

Summary #

LVOS: Long-Term Video Object Segmentation is a dataset for instance segmentation, semantic segmentation, object detection, and identification tasks. It is applicable or relevant across various domains.

The dataset consists of 126289 images with 117151 labeled objects belonging to 1 single class (object).

Images in the LVOS dataset have pixel-level instance segmentation annotations. Because of the nature of instance segmentation, the annotations can be automatically transformed into a semantic segmentation task (only one mask for every class) or an object detection task (bounding boxes for every object). There are 31917 unlabeled images (25% of the total), i.e. images without annotations. There are 3 splits in the dataset: train (65237 images), val (31158 images), and test (29894 images). The sequence tag defines the split for each video sequence and is applied to every image. The id and caption tags are assigned to every label on an image. The dataset was released in 2023 by Fudan University, China.
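The conversion mentioned above is mechanical once the instance masks are available as arrays. The sketch below assumes each instance is a boolean NumPy array with the same shape as the image; the function names are illustrative and not part of any dataset tooling.

```python
import numpy as np


def instances_to_semantic(instance_masks, shape):
    """Merge per-instance masks into one mask for the single 'object' class."""
    semantic = np.zeros(shape, dtype=bool)
    for mask in instance_masks:
        semantic |= np.asarray(mask, dtype=bool)
    return semantic


def instances_to_boxes(instance_masks):
    """Return one tight (x0, y0, x1, y1) box per non-empty instance mask."""
    boxes = []
    for mask in instance_masks:
        ys, xs = np.nonzero(mask)
        if ys.size == 0:
            continue  # an empty mask has no bounding box
        boxes.append((int(xs.min()), int(ys.min()),
                      int(xs.max()) + 1, int(ys.max()) + 1))
    return boxes
```

Note that the semantic mask loses instance identity where objects overlap, while the boxes keep one entry per object.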

Class balance #

There is 1 annotation class in the dataset. The table below gives the general statistics and balance for this class.

| Class | Images | Objects | Count on image (average) | Area on image (average) |
|---|---|---|---|---|
| object mask | 94372 | 117151 | 1.24 | 3.45% |
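The figures in this table can be cross-checked against the totals in the summary. The short calculation below suggests that the average count per image is taken over images that contain the class rather than over all images; this is inferred from the numbers and is not stated by the source.

```python
total_images = 126289        # all images in the dataset
images_with_class = 94372    # images with at least one 'object' label
objects = 117151             # labeled objects

unlabeled_images = total_images - images_with_class        # 31917
unlabeled_share = unlabeled_images / total_images          # about 0.25
objects_per_labeled_image = objects / images_with_class    # about 1.24
objects_per_image_overall = objects / total_images         # about 0.93
```

Only the per-labeled-image value rounds to the 1.24 reported in the table.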

Class sizes #

The table below gives various size properties of objects for every class, in pixels and as a percentage of the image.

| Class | Object count | Avg area | Max area | Min area | Min height (px) | Min height (%) | Max height (px) | Max height (%) | Avg height (px) | Avg height (%) | Min width (px) | Min width (%) | Max width (px) | Max width (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| object mask | 117151 | 2.78% | 99.68% | 0% | 1px | 0.14% | 720px | 100% | 188px | 26.06% | 1px | 0.08% | 1280px | 100% |

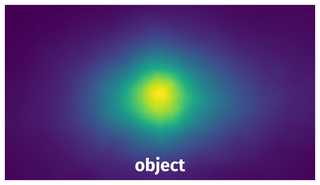

Objects #

The table contains all 100142 objects; its first rows are shown below.

| Object ID | Class | Image name | Image size (height x width) | Height (px) | Height (%) | Width (px) | Width (%) | Area (%) |
|---|---|---|---|---|---|---|---|---|
| 1 | object mask | xL5OHe3Y_00000001.jpg | 720 x 1280 | 222px | 30.83% | 74px | 5.78% | 1.18% |
| 2 | object mask | TVfoBiU2_00000001.jpg | 720 x 1280 | 357px | 49.58% | 469px | 36.64% | 8.01% |
| 3 | object mask | irsQQbUO_00000001.jpg | 720 x 1280 | 83px | 11.53% | 67px | 5.23% | 0.51% |
| 4 | object mask | 8kv99Cop_00000001.jpg | 720 x 1280 | 49px | 6.81% | 24px | 1.88% | 0.06% |
| 5 | object mask | 8kv99Cop_00000001.jpg | 720 x 1280 | 10px | 1.39% | 69px | 5.39% | 0.06% |
| 6 | object mask | Oj1z5fO0_00000001.jpg | 720 x 1280 | 130px | 18.06% | 209px | 16.33% | 1.42% |
| 7 | object mask | MI0uwoMt_00000001.jpg | 720 x 1280 | 30px | 4.17% | 31px | 2.42% | 0.08% |
| 8 | object mask | irsQQbUO_00000551.jpg | 720 x 1280 | 9px | 1.25% | 48px | 3.75% | 0.04% |
| 9 | object mask | gw84JOqH_00000981.jpg | 720 x 1280 | 232px | 32.22% | 54px | 4.22% | 0.97% |
| 10 | object mask | vlKROEmy_00000886.jpg | 720 x 1280 | 14px | 1.94% | 30px | 2.34% | 0.04% |

License #

Citation #

If you make use of the LVOS data, please cite the following reference:

@InProceedings{Hong_2023_ICCV,
  author = {Hong, Lingyi and Chen, Wenchao and Liu, Zhongying and Zhang, Wei and Guo, Pinxue and Chen, Zhaoyu and Zhang, Wenqiang},
  title = {LVOS: A Benchmark for Long-term Video Object Segmentation},
  booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  month = {October},
  year = {2023},
  pages = {13480-13492}
}

If you are happy with Dataset Ninja and use provided visualizations and tools in your work, please cite us:

@misc{ visualization-tools-for-lvos-dataset,
  title = { Visualization Tools for LVOS Dataset },
  type = { Computer Vision Tools },
  author = { Dataset Ninja },
  howpublished = { \url{ https://datasetninja.com/lvos } },
  url = { https://datasetninja.com/lvos },
  journal = { Dataset Ninja },
  publisher = { Dataset Ninja },
  year = { 2026 },
  month = { feb },
  note = { visited on 2026-02-15 },
}

Download #

The LVOS dataset can be downloaded in Supervisely format.

As an alternative, it can be downloaded with the dataset-tools package:

pip install --upgrade dataset-tools

… using the following Python code:

import dataset_tools as dtools

dtools.download(dataset='LVOS', dst_dir='~/dataset-ninja/')

Make sure not to overlook the Python code example available on the Supervisely Developer Portal. It will give you a clear idea of how to work with the downloaded dataset.
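For a quick look at a downloaded copy without additional tooling, the sketch below counts labeled objects per class using only the standard library. It assumes the Supervisely project layout of one folder per split, each with an `ann` subfolder of per-image JSON files holding an `objects` list whose entries carry a `classTitle`. The layout, the key names, and the `project_dir` argument are assumptions to verify against the files you actually receive.

```python
import json
from collections import Counter
from pathlib import Path


def count_objects(project_dir):
    """Count labeled objects per class title across all annotation JSON files.

    Assumed layout (hypothetical): <project_dir>/<split>/ann/<image>.json
    """
    counts = Counter()
    for ann_path in Path(project_dir).expanduser().glob("*/ann/*.json"):
        with open(ann_path, encoding="utf-8") as f:
            ann = json.load(f)
        # Each entry of "objects" is assumed to describe one labeled instance.
        for obj in ann.get("objects", []):
            counts[obj.get("classTitle", "unknown")] += 1
    return counts
```

If the layout matches, the total for the single class could be compared against the object count reported in the summary as a sanity check on the download.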

The data in original format can be downloaded here.

Disclaimer #

Our gal from the legal dep told us we need to post this:

Dataset Ninja provides visualizations and statistics for some datasets that can be found online and can be downloaded by general audience. Dataset Ninja is not a dataset hosting platform and can only be used for informational purposes. The platform does not claim any rights for the original content, including images, videos, annotations and descriptions. Joint publishing is prohibited.

You take full responsibility when you use datasets presented at Dataset Ninja, as well as other information, including visualizations and statistics we provide. You are in charge of compliance with any dataset license and all other permissions. You are required to visit the dataset's homepage and make sure that you are permitted to use it. In case of any questions, get in touch with us at hello@datasetninja.com.