Introduction #

The MCubeS: Multimodal Material Segmentation Dataset was created from RGB, polarization, and near-infrared images. The dataset contains 500 sets of multimodal images capturing 42 street scenes. The capacity to discern materials based on their visual attributes is fundamental for computer vision applications, especially those operating in real-world scenarios. Material segmentation, which requires identifying materials at the per-pixel level, poses a substantial challenge. Unlike objects, materials lack clear visual signatures in standard RGB representations. Nonetheless, the distinct radiometric behaviors of different materials can be captured through imaging modalities beyond RGB. Every pixel of every image in the dataset is annotated with ground truth for both material segmentation and semantic segmentation.

While advancements in object recognition empower computers to precisely identify objects within images, the human visual system goes beyond mere object identification. It can discriminate between, for example, a paper cup and a ceramic one, enabling thoughtful planning of interactions before physical engagement. If computers could emulate this discernment of object composition, critical decisions could be made faster and more accurately. This motivates the task of densely recognizing materials at the pixel level in an image. Successful material segmentation offers notable advantages for road scene analysis, allowing autonomous vehicles or advanced driver assistance systems (ADAS) to differentiate between an asphalt road and a concrete one, or to tell a leaf on the road from dirt, thereby enhancing control safety.

Despite the substantial strides in object recognition, outdoor material segmentation remains challenging due to the myriad materials encountered in the real world and the scarcity of annotated data. The intricacy of material segmentation is heightened by the lack of well-defined visual features in regular RGB images for materials, as opposed to objects that frequently exhibit distinctive shapes, contours, and surface textures.

Dataset creation

The authors realize multimodal material segmentation: the recognition of per-pixel material categories from a set of images taken from the same vantage point but with different imaging modalities. In particular, they consider the combination of regular RGB, polarization, and near-infrared images at each instance. They build an imaging system consisting of a binocular stereo pair of quad-Bayer RGB polarization cameras, a monocular near-infrared camera, and a LiDAR to capture outdoor road scenes. In addition to materials, the authors also annotated semantic segmentation labels.

Per-pixel recognition of materials from multiple imaging modalities.

MCubeS provides a comprehensive depiction of the visual features of various materials found in everyday outdoor environments, observed from viewpoints situated on roads, pavements, and sidewalks. The primary challenges centered around achieving systematic image capture with precise spatio-temporal alignment and subsequent annotation involving pixel-wise categorization of materials.

In the real world, surfaces composed of diverse materials exhibit distinct subsurface compositions and surface structures at the mesoscopic scale. These differences manifest in varying behaviors of incident light, influencing its reflection, refraction, and absorption. These nuances are particularly discernible in polarization properties, including degrees of linear polarization (DoLP) or angles of linear polarization (AoLP), as well as in the absorption of near-infrared (NIR) light. The MCubeS dataset meticulously captures these intricate radiometric characteristics of diverse materials through a camera system integrating a stereo pair of RGB-polarization (RGB-P) cameras and a near-infrared (NIR) camera. Additionally, the image capture system incorporates LiDAR technology to enhance the accuracy of label propagation.

Example multimodal images from the MCubeS Dataset. Different imaging modalities capture characteristic radiometric behaviors of different materials.

Image Capture System

The researchers devised a customized imaging system, affixing it to a cart to capture multimodal sequences of real-world scenes, replicating a viewpoint akin to that of a car. This system is augmented with a sparse LiDAR, strategically incorporated to facilitate the subsequent propagation of annotations across diverse image modalities. The imaging apparatus comprises a duo of RGB-polarization (RGB-P) cameras (LUCID TRI050S-QC, 2/3-inch sensor), an NIR camera (FLIR GS3-U3-41C6NIR-C, 1-inch sensor), and a LiDAR unit (Livox Mid-100).

Dataset description

The MCubeS dataset comprises two distinct categories of image sequences. The first type involves continuous forward movement of the imaging system, replicating the viewpoint of a moving vehicle. The second type consists of sequences captured at a stationary position while the imaging system pans, facilitating the inclusion of materials such as water, which are infrequently observed in road scenes. This second type of data enhances the diversity of sample images, particularly for less common materials.

The dataset encompasses 42 scenes of the first type (continuous forward movement), sampled at three frames per second, with an average sequence length of 309 seconds. The raw image sequences total 26,650 image sets, from which 424 image sets, evenly spaced in time, were annotated. Additionally, 19 scenes were captured for the second type of data (fixed-location panning). For each scene, eight image sets were acquired, covering a 360-degree view, and a total of 76 image sets from these sequences were annotated. Overall, the dataset includes 500 annotated image sets. The authors defined 20 distinct materials through a thorough examination of the data within the MCubeS dataset.

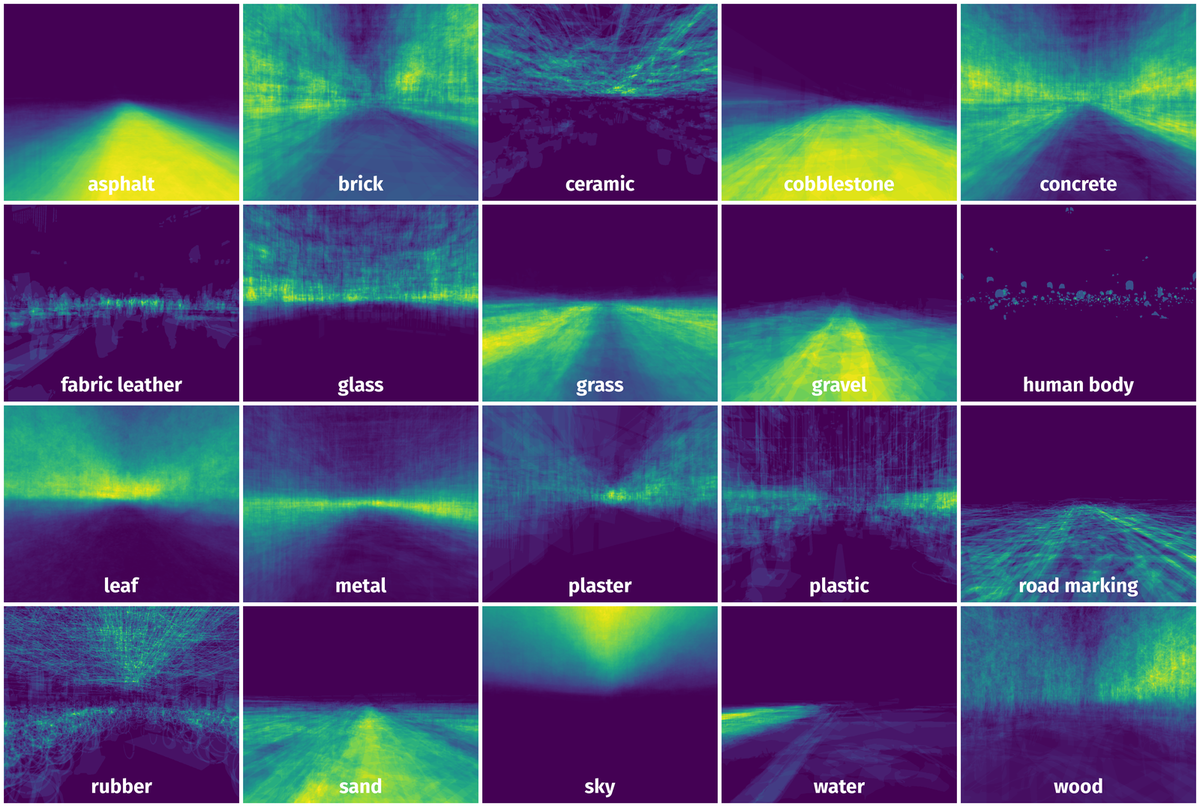

Example regions for each class. The actual images are annotated densely for all pixels. Human body (skin and hair), leaf, grass, and sky are object and stuff names selected to represent their unique materials.

MCubeS scenes predominantly feature roadways and sidewalks, encompassing diverse elements ranging from pavements and roads to various objects like bicycles and manholes. Notably, these elements can be composed of distinct materials, even within the same category. For instance, pavements may be constructed from asphalt, concrete, brick, cobblestone, gravel, or sand. Road markings and manholes receive special attention and are treated as distinct materials because of their significance in driving scenarios. Vehicles are primarily constructed with metal, rubber, and glass components. Human attire is labeled as fabric, a material found almost exclusively on people. Among natural elements, trees and grass are prevalent in the scenes; trees are labeled as wood and fallen leaves as leaves. Water, found in puddles, rivers, and ponds, is another noteworthy material.

The remaining categories cover materials like ceramic, plaster, plastic, and sky, which are common but occur less often or matter less in driving scenarios. The authors annotate every image with per-pixel material classes. They first annotate the left RGB image and then propagate the per-pixel labels to the other views of the same frame. Additionally, a dense depth image for the left RGB-P camera is reconstructed by integrating RGB stereo and LiDAR 3D points.
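The propagation of labels from the annotated left view to another view can be illustrated with a standard depth-based reprojection. The following is a minimal sketch, not the authors' pipeline: it assumes pinhole cameras with known intrinsics (`K_src`, `K_dst`), a known rigid transform (`R`, `t`) from the source to the destination camera, and a dense depth map for the source view. All function and parameter names are hypothetical.

```python
import numpy as np


def propagate_labels(labels, depth, K_src, K_dst, R, t, dst_shape, ignore=255):
    """Reproject a per-pixel label map into another camera using dense depth.

    labels: (H, W) integer label map of the source (annotated) view.
    depth:  (H, W) depth along the source camera's optical axis; <= 0 means missing.
    R, t:   rotation (3x3) and translation (3,) mapping source-camera
            coordinates to destination-camera coordinates.
    Pixels of the destination view that receive no label are set to `ignore`.
    """
    h, w = labels.shape
    v, u = np.mgrid[0:h, 0:w]
    # Homogeneous pixel coordinates of every source pixel, shape (3, N).
    pix = np.stack([u.ravel(), v.ravel(), np.ones(h * w)], axis=0).astype(np.float64)
    z = depth.ravel().astype(np.float64)

    # Back-project to 3D in the source frame, then move to the destination frame.
    pts_src = (np.linalg.inv(K_src) @ pix) * z
    pts_dst = R @ pts_src + np.asarray(t, dtype=np.float64).reshape(3, 1)

    # Keep points with valid depth that lie in front of the destination camera.
    valid = (z > 0) & (pts_dst[2] > 0)
    proj = K_dst @ pts_dst[:, valid]
    u_dst = np.rint(proj[0] / proj[2]).astype(int)
    v_dst = np.rint(proj[1] / proj[2]).astype(int)
    lab = labels.ravel()[valid]
    z_dst = pts_dst[2, valid]

    # Discard points that fall outside the destination image.
    inside = (u_dst >= 0) & (u_dst < dst_shape[1]) & (v_dst >= 0) & (v_dst < dst_shape[0])
    u_dst, v_dst, lab, z_dst = u_dst[inside], v_dst[inside], lab[inside], z_dst[inside]

    # Simple z-buffer: write far points first so that nearer points overwrite them.
    order = np.argsort(-z_dst)
    out = np.full(dst_shape, ignore, dtype=labels.dtype)
    out[v_dst[order], u_dst[order]] = lab[order]
    return out
```

Nearest-pixel splatting like this leaves holes where the destination view sees surfaces that are occluded or unsampled in the source view, so a real pipeline would typically need hole filling or manual correction on top of it.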

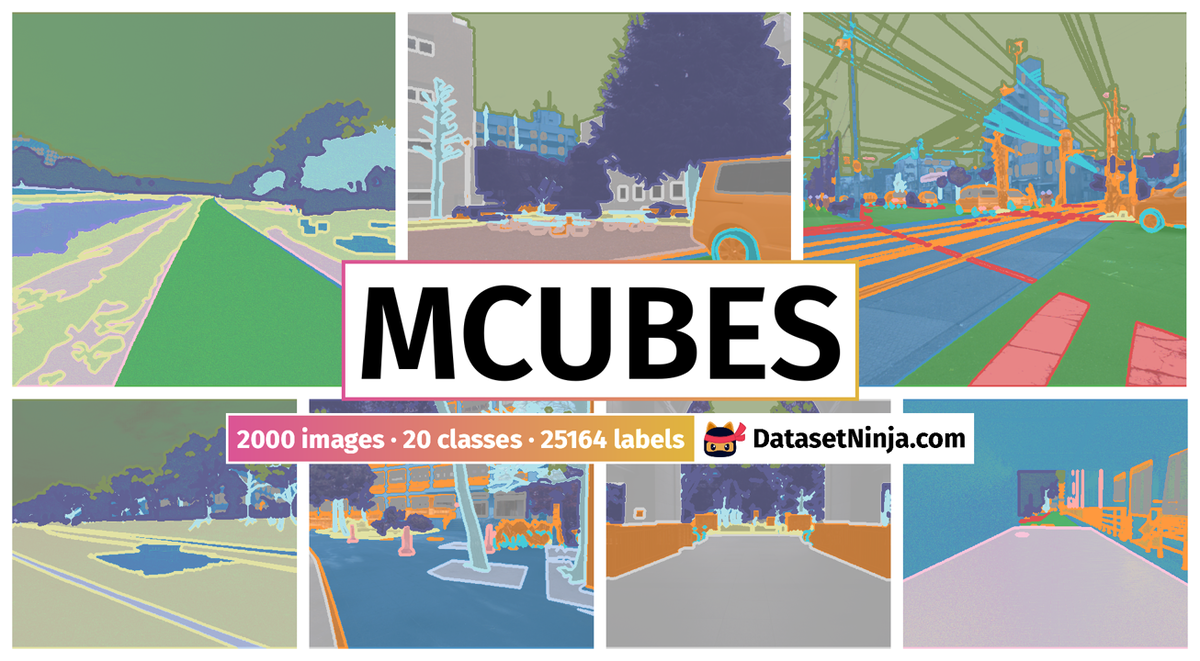

MCubeS dataset spans a wide range of road scenes (top row), including river sidewalks to railroad tracks, each densely annotated with materials (bottom row).

Image modalities

Polarization

Light is a transverse wave, characterized by mutually perpendicular electric and magnetic fields. When the electric field of light lies within a single plane, the light is termed linearly polarized. The orientation angle of polarized light is defined within the plane perpendicular to the direction of propagation. In contrast, unpolarized light comprises electric fields uniformly oriented in all directions.

When the orientations of the electric fields form an ellipse on the plane perpendicular to the direction of propagation, the light is considered partially linearly polarized. In this scenario, the major axis of the ellipse defines the angle of polarization, and the ratio of the magnitudes of the major and minor axes is denoted as the degree of polarization. The intensity of partially polarized light observed through a polarization filter depends on the filter angle, the angle of linear polarization (AoLP), and the degree of linear polarization (DoLP), which is what allows AoLP and DoLP to be measured.
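As an illustration of how AoLP and DoLP can be recovered from filtered intensities, here is a minimal sketch based on the standard linear Stokes parameters. It assumes intensities measured behind polarization filters at 0, 45, 90, and 135 degrees, which is the usual layout of polarization sensors but is an assumption about this camera, and it uses the common measurement model I(φc) = Ī(1 + ρ cos(2φc − 2φ)), where Ī is the mean intensity over filter angles, ρ the DoLP, and φ the AoLP. Function names are hypothetical.

```python
import numpy as np


def filtered_intensity(mean_intensity, dolp, aolp, filter_angle):
    """Intensity seen through a linear polarizer at `filter_angle` (radians)."""
    return mean_intensity * (1.0 + dolp * np.cos(2.0 * filter_angle - 2.0 * aolp))


def dolp_aolp(i0, i45, i90, i135, eps=1e-12):
    """Estimate DoLP and AoLP (radians, in [0, pi)) from four filtered intensities.

    Inputs may be scalars or arrays of equal shape (for example, image channels).
    """
    i0, i45, i90, i135 = (np.asarray(x, dtype=np.float64) for x in (i0, i45, i90, i135))
    # Linear Stokes parameters.
    s0 = 0.5 * (i0 + i45 + i90 + i135)  # total intensity
    s1 = i0 - i90                       # horizontal vs. vertical
    s2 = i45 - i135                     # diagonal vs. anti-diagonal
    dolp = np.sqrt(s1 ** 2 + s2 ** 2) / np.maximum(s0, eps)
    aolp = np.mod(0.5 * np.arctan2(s2, s1), np.pi)
    return dolp, aolp


# Round trip: synthesize the four measurements, then recover DoLP and AoLP.
angles = np.deg2rad([0.0, 45.0, 90.0, 135.0])
measurements = [filtered_intensity(1.0, 0.4, 0.6, a) for a in angles]
estimated_dolp, estimated_aolp = dolp_aolp(*measurements)
```

Because AoLP is an orientation rather than a direction, it is only defined modulo π, which is why the result is wrapped into [0, π).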

Near-infrared Light

Light comprises a spectrum of electromagnetic waves with varying wavelengths, leading to distinctive radiometric behaviors dependent on the wavelength. Objects exhibit diverse colors as their reflection is influenced by wavelength. Simultaneously, the absorption and scattering characteristics of light transmitted into a subsurface or a medium vary based on wavelength. For instance, shorter wavelength light tends to undergo more forward scattering compared to longer wavelength light. The absorption of light experiences pronounced changes, particularly beyond the visual spectrum.

The absorption of light by water is highly sensitive to wavelength. Water is a common element in our daily surroundings, present not just in puddles but also in natural surfaces such as water-containing leaves. In the near-infrared range from 800 nm to 1000 nm, the absorption coefficient of light in water grows nearly linearly from 0 to 1. Consequently, for a camera equipped with a near-infrared filter within this wavelength range observing water or water-containing surfaces, varying intensity levels encode the depth or “wetness” of the surface, with darker shades indicating greater depth or wetness.
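The link between absorption and observed darkness can be sketched with the Beer-Lambert law, under which the transmitted fraction of light decays exponentially with the absorption coefficient times the path length through the medium. The sketch below is illustrative only: it takes the "nearly linear growth from 0 to 1" stated above as an exactly linear model, and it assumes the path length is expressed in the reciprocal of whatever unit the coefficient uses, since the text does not state the unit. Function names are hypothetical.

```python
import math


def water_absorption_coefficient(wavelength_nm):
    """Linear approximation: 0 at 800 nm rising to 1 at 1000 nm (unit assumed)."""
    if not 800.0 <= wavelength_nm <= 1000.0:
        raise ValueError("model only covers 800 nm to 1000 nm")
    return (wavelength_nm - 800.0) / 200.0


def transmitted_fraction(wavelength_nm, path_length):
    """Beer-Lambert law: fraction of light remaining after `path_length` of water."""
    alpha = water_absorption_coefficient(wavelength_nm)
    return math.exp(-alpha * path_length)


# At a fixed wavelength, a longer path through water transmits less light,
# which is why deeper or wetter surfaces appear darker in the NIR image.
shallow = transmitted_fraction(950.0, 1.0)
deep = transmitted_fraction(950.0, 3.0)
```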

Summary #

MCubeS: Multimodal Material Segmentation Dataset is a dataset for semantic segmentation and object detection tasks. It is used in the construction and automotive industries.

The dataset consists of 2000 images with 25164 labeled objects belonging to 20 different classes: concrete, metal, leaf, sky, wood, glass, asphalt, rubber, grass, plastic, brick, ceramic, road marking, fabric leather, human body, sand, cobblestone, plaster, water, and gravel.

Images in the MCubeS dataset have pixel-level semantic segmentation annotations. All images are labeled (i.e. with annotations). There are 3 splits in the dataset: train (1208 images), test (408 images), and val (384 images). Additionally, images are grouped by im id (500 image groups in total). Every image also carries information about its scene (42 scenes in total). Explore it in the Supervisely labeling tool. The dataset was released in 2022 by the Graduate School of Informatics, Kyoto, Japan.
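The split sizes and grouping above can be sanity-checked with a few lines of code. The sketch below also groups files into image sets by name. The naming pattern is an assumption inferred from a single file name listed in the Objects table on this page (`dolp_outscene1212_5_0000000120.png`), read as a modality prefix followed by a set identifier. The `nir` prefix used in the example is hypothetical, and the page itself groups images through the im id tag rather than through file names.

```python
from collections import defaultdict
from pathlib import Path

# Figures quoted in the summary above.
SPLITS = {"train": 1208, "test": 408, "val": 384}
TOTAL_IMAGES = 2000
IMAGE_GROUPS = 500


def split_modality(filename):
    """Split 'dolp_outscene1212_5_0000000120.png' into (modality, set identifier).

    Assumes the text before the first underscore names the modality.
    """
    stem = Path(filename).stem
    modality, _, set_id = stem.partition("_")
    return modality, set_id


def group_by_image_set(filenames):
    """Map each set identifier to a {modality: filename} dictionary."""
    groups = defaultdict(dict)
    for name in filenames:
        modality, set_id = split_modality(name)
        groups[set_id][modality] = name
    return dict(groups)


# The three splits add up to the full dataset, and the totals imply
# four images per group on average.
images_per_group = TOTAL_IMAGES / IMAGE_GROUPS
splits_cover_dataset = sum(SPLITS.values()) == TOTAL_IMAGES
```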

Explore #

MCubeS dataset has 2000 images. Click on one of the examples below or open "Explore" tool anytime you need to view dataset images with annotations. This tool has extended visualization capabilities like zoom, translation, objects table, custom filters and more. Hover the mouse over the images to hide or show annotations.

Class balance #

There are 20 annotation classes in the dataset. Find the general statistics and balances for every class in the table below. Click any row to preview images that have labels of the selected class. Sort by column to find the most rare or prevalent classes.

| Class | Images | Objects | Count on image (average) | Area on image (average) |
|---|---|---|---|---|
| concrete mask | 1996 | 1996 | 1 | 13.94% |
| metal mask | 1960 | 1960 | 1 | 7.66% |
| leaf mask | 1940 | 1940 | 1 | 12.95% |
| sky mask | 1888 | 1888 | 1 | 16.2% |
| wood mask | 1844 | 1844 | 1 | 4.63% |
| glass mask | 1780 | 1780 | 1 | 1.99% |
| asphalt mask | 1580 | 1580 | 1 | 22.6% |
| rubber mask | 1488 | 1488 | 1 | 0.9% |
| grass mask | 1484 | 1484 | 1 | 6.91% |
| plastic mask | 1380 | 1380 | 1 | 0.77% |

Co-occurrence matrix #

Co-occurrence matrix is an extremely valuable tool that shows you the images for every pair of classes: how many images have objects of both classes at the same time. If you click any cell, you will see those images. We added the tooltip with an explanation for every cell for your convenience, just hover the mouse over a cell to preview the description.

Images #

Explore every single image in the dataset with respect to the number of annotations of each class it has. Click a row to preview selected image. Sort by any column to find anomalies and edge cases. Use horizontal scroll if the table has many columns for a large number of classes in the dataset.

Object distribution #

The interactive heatmap chart shows, for every class, how many images in the dataset contain a certain number of objects of that class. Click a cell to see the list of all corresponding images.

Class sizes #

The table below gives various size properties of objects for every class. Click a row to see the image with annotations of the selected class. Sort columns to find classes with the smallest or largest objects or understand the size differences between classes.

| Class | Object count | Avg area | Max area | Min area | Min height (px) | Min height (%) | Max height (px) | Max height (%) | Avg height (px) | Avg height (%) | Min width (px) | Min width (%) | Max width (px) | Max width (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| concrete mask | 1996 | 13.94% | 57.35% | 0.13% | 11px | 1.07% | 1024px | 100% | 694px | 67.81% | 168px | 13.73% | 1224px | 100% |
| metal mask | 1960 | 7.66% | 52.61% | 0% | 5px | 0.49% | 1024px | 100% | 631px | 61.59% | 5px | 0.41% | 1224px | 100% |
| leaf mask | 1940 | 12.95% | 54.11% | 0.02% | 27px | 2.64% | 1024px | 100% | 496px | 48.45% | 18px | 1.47% | 1224px | 100% |
| sky mask | 1888 | 16.2% | 43.8% | 0.02% | 17px | 1.66% | 546px | 53.32% | 388px | 37.91% | 28px | 2.29% | 1224px | 100% |
| wood mask | 1844 | 4.63% | 45.73% | 0.01% | 9px | 0.88% | 1024px | 100% | 428px | 41.76% | 5px | 0.41% | 1224px | 100% |
| glass mask | 1780 | 1.99% | 14.01% | 0% | 4px | 0.39% | 689px | 67.29% | 335px | 32.7% | 11px | 0.9% | 1224px | 100% |
| asphalt mask | 1580 | 22.6% | 47.17% | 0% | 2px | 0.2% | 638px | 62.3% | 411px | 40.16% | 10px | 0.82% | 1224px | 100% |
| rubber mask | 1488 | 0.9% | 6.01% | 0% | 2px | 0.2% | 1024px | 100% | 306px | 29.83% | 3px | 0.25% | 1224px | 100% |
| grass mask | 1484 | 6.91% | 44.62% | 0.01% | 10px | 0.98% | 614px | 59.96% | 274px | 26.78% | 9px | 0.74% | 1224px | 100% |
| plastic mask | 1380 | 0.77% | 11.7% | 0% | 2px | 0.2% | 973px | 95.02% | 255px | 24.88% | 3px | 0.25% | 1224px | 100% |

Spatial Heatmap #

The heatmaps below give the spatial distributions of all objects for every class. These visualizations provide insights into the most probable and rare object locations on the image. It helps analyze objects' placements in a dataset.

Objects #

Table contains all 25164 objects. Click a row to preview an image with annotations, and use search or pagination to navigate. Sort columns to find outliers in the dataset.

| Object ID | Class | Image name | Image size (height x width) | Height (px) | Height (%) | Width (px) | Width (%) | Area |
|---|---|---|---|---|---|---|---|---|
| 1 | asphalt mask | dolp_outscene1212_5_0000000120.png | 1024 x 1224 | 516px | 50.39% | 1224px | 100% | 32.21% |
| 2 | concrete mask | dolp_outscene1212_5_0000000120.png | 1024 x 1224 | 1024px | 100% | 1224px | 100% | 4.51% |
| 3 | metal mask | dolp_outscene1212_5_0000000120.png | 1024 x 1224 | 1024px | 100% | 1224px | 100% | 13.95% |
| 4 | road marking mask | dolp_outscene1212_5_0000000120.png | 1024 x 1224 | 477px | 46.58% | 240px | 19.61% | 1.31% |
| 5 | glass mask | dolp_outscene1212_5_0000000120.png | 1024 x 1224 | 253px | 24.71% | 865px | 70.67% | 0.39% |
| 6 | plaster mask | dolp_outscene1212_5_0000000120.png | 1024 x 1224 | 85px | 8.3% | 317px | 25.9% | 0.36% |
| 7 | plastic mask | dolp_outscene1212_5_0000000120.png | 1024 x 1224 | 116px | 11.33% | 60px | 4.9% | 0.16% |
| 8 | rubber mask | dolp_outscene1212_5_0000000120.png | 1024 x 1224 | 436px | 42.58% | 897px | 73.28% | 1% |
| 9 | ceramic mask | dolp_outscene1212_5_0000000120.png | 1024 x 1224 | 73px | 7.13% | 291px | 23.77% | 0.18% |
| 10 | brick mask | dolp_outscene1212_5_0000000120.png | 1024 x 1224 | 597px | 58.3% | 1224px | 100% | 6.96% |

License #

Citation #

If you make use of the Multimodal Material Segmentation data, please cite the following reference:

@InProceedings{Liang_2022_CVPR,
  author = {Liang, Yupeng and Wakaki, Ryosuke and Nobuhara, Shohei and Nishino, Ko},
  title = {Multimodal Material Segmentation},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month = {June},
  year = {2022},
  pages = {19800-19808}
}

If you are happy with Dataset Ninja and use provided visualizations and tools in your work, please cite us:

@misc{ visualization-tools-for-multimodal-material-segmentation-dataset,
  title = { Visualization Tools for MCubeS Dataset },
  type = { Computer Vision Tools },
  author = { Dataset Ninja },
  howpublished = { \url{ https://datasetninja.com/multimodal-material-segmentation } },
  url = { https://datasetninja.com/multimodal-material-segmentation },
  journal = { Dataset Ninja },
  publisher = { Dataset Ninja },
  year = { 2026 },
  month = { feb },
  note = { visited on 2026-02-26 },
}

Download #

Dataset MCubeS can be downloaded in Supervisely format:

As an alternative, it can be downloaded with dataset-tools package:

pip install --upgrade dataset-tools

… using the following Python code:

import dataset_tools as dtools

dtools.download(dataset='MCubeS', dst_dir='~/dataset-ninja/')

Make sure not to overlook the python code example available on the Supervisely Developer Portal. It will give you a clear idea of how to effortlessly work with the downloaded dataset.

The data in original format can be downloaded here.

Disclaimer #

Our gal from the legal dep told us we need to post this:

Dataset Ninja provides visualizations and statistics for some datasets that can be found online and can be downloaded by general audience. Dataset Ninja is not a dataset hosting platform and can only be used for informational purposes. The platform does not claim any rights for the original content, including images, videos, annotations and descriptions. Joint publishing is prohibited.

You take full responsibility when you use datasets presented at Dataset Ninja, as well as other information, including visualizations and statistics we provide. You are in charge of compliance with any dataset license and all other permissions. You are required to visit the dataset's homepage and make sure that you can use it. In case of any questions, get in touch with us at hello@datasetninja.com.